Duplicate Content: Why It Happens and How to Fix It

Duplicate content is a major obstacle to Google indexing and a common headache for website owners.

The most annoying part is that you may be completely unaware that some of your web pages have duplicates, which is why this is a worthwhile read.

In this ultimate guide to fixing duplicate content, I’ll describe what duplicate content is, what causes it, how it affects your SEO, and the best practices to get rid of duplicates.

If ranking high on Google search engine result pages means a lot to you, then you should keep on reading.

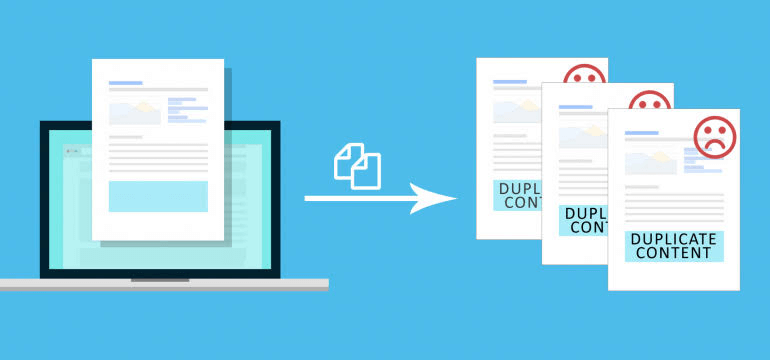

What Is Duplicate Content?

Content is said to be duplicated when identical or near-identical content exists in the same language at more than one location on the internet.

It’s the same content or something similar on multiple web pages.

It could be cross-domain or on the same website.

Most of the time, this occurrence is not deliberate or deceptive and could arise from a technical mishap.

However, in some cases, website owners duplicate their content intentionally so that they can get more traffic or manipulate SEO rankings.

Besides confusing search engines, duplicate content adds no value for your visitors and makes for a poor user experience: they see the same content, or substantial blocks of it, repeated in the search result pages.

Pages with little or no body content can also be treated as duplicate content.

Two types of duplicate content exist—onsite and off-site duplicates.

- Onsite duplicates are the same content present on more than one unique web address or URL on your platform. This type of duplicate content can be checked by your web developer and website admin.

- Off-site duplicates are duplicates published by more than one website. This type of duplicate cannot be directly controlled and needs a third party working with the offending website owners to handle it.

The result is that duplicate content confuses search engine robots, so search engines like Google don’t know which page or website to rank higher on the search engine results pages.

Duplicate Content – Impact on SEO?

Whether you are still improving your SEO metrics or already have strong ones, duplicate content is harmful.

Here are some reasons why:

- Unfriendly or undesirable web addresses in the search results;

- Affects the crawl budget;

- Backlink dilution;

- Syndicated and scraped content outranking your website;

- Lower rankings.

How does each of these affect your SEO?

1. Unfriendly or undesirable web addresses in the search results

If a web page on your platform has three different web addresses like so:

- com/page/

- com/page/?utm_content=buffer&utm_medium=social

- com/category/page/

The first is ideal and should be the one indexed by Google.

However, because there are other alternatives with the same content, if Google displays the other URLs, you’ll get fewer visitors because the other two URLs are unfriendly and not as enticing as the first.

2. Backlink Dilution

Backlinks are a good source for boosting SEO rankings.

That is why you should avoid anything that weakens this potent SEO asset.

When you have the same content on multiple web addresses, each of those addresses can attract backlinks.

As a result, the “link equity” is split across the various URLs instead of being concentrated on one page.

3. Crawl Budget

Through the mechanism of crawling, Google locates updates in your content by following URLs from your older pages to the latest ones.

Google also recrawls pages they have crawled previously to take note of any changes in the contents.

When you have duplicates on your website, the frequency and speed with which Google robots crawl your updated and new content are reduced.

That delays the indexing and reindexing of your new and updated content.

4. Your Website Is Outranked by Scraped Content

When other websites seek your permission and republish the content on your web pages, it’s called syndication.

However, in some situations, website owners can just duplicate your content without your permission.

Each one of these scenarios can lead to duplicate content, and when these websites outrank your website, problems begin to surface.

5. Lower Rankings

Duplicate content ultimately leads to lower rankings on search engine results pages.

That is because when you have duplicate content on multiple web pages, search engine robots will be confused about which URL to suggest to potential visitors.

Therefore, search engines will only display yours after the other relevant websites have been shown, which means lower rankings for you.

Duplicate Content – Causes

Duplicate content arises for many reasons. Most of the time, the cause is unintentional, such as a technical problem.

These technical issues arise at the level of developers. Here are a couple of reasons why duplicate content comes up.

1. URL Misconception

This technical issue arises when your website is built in such a way that even though there’s only a single version of an article in the database, the website software permits it to be accessed from more than one web address or URL.

Put another way, the database has only one article, which your developer identifies with a unique ID.

However, a search engine crawls and indexes that content through its URLs, and for the search engine, the URL is the unique identifier for a piece of content. The same article reachable at several URLs therefore looks like several pieces of content.

Solution to Cause

2. WWW vs. non-WWW and HTTPS vs. HTTP

Take a look at these URLs:

- http://abcdef.com – HTTP, non-www variant.

- http://www.abcdef.com – HTTP, www variant.

- https://abcdef.com – HTTPS, non-www variant.

- https://www.abcdef.com – HTTPS, www variant.

Visitors can visit almost all websites through any of the four URL variations above.

You can use HTTPS or the less secure HTTP version, which can be either “www” or “non-www”.

If your website developer doesn’t adequately set up your website server, users can access your content through two or more of the above alternatives, which can cause problems with content duplication.

Solution to Cause

- Apply redirects to your website so that visitors can enter your platform through one location only.
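As a rough illustration of the redirect rule above, the sketch below works out where a request for any of the four variants should be permanently redirected. The preferred scheme and host are assumptions chosen for the example, and `redirect_target` is a hypothetical helper; in practice this rule usually lives in the web server or CDN configuration.

```python
from typing import Optional
from urllib.parse import urlsplit, urlunsplit

# Assumption: the site standardizes on the HTTPS, www variant.
PREFERRED_SCHEME = "https"
PREFERRED_HOST = "www.abcdef.com"


def _strip_www(host: str) -> str:
    """Drop a leading 'www.' so both host variants compare equal."""
    return host[4:] if host.startswith("www.") else host


def redirect_target(url: str) -> Optional[str]:
    """Return the URL to redirect to, or None if no redirect is needed."""
    parts = urlsplit(url)
    host = parts.netloc.lower()
    if _strip_www(host) != _strip_www(PREFERRED_HOST):
        return None  # not one of this site's variants
    if parts.scheme == PREFERRED_SCHEME and host == PREFERRED_HOST:
        return None  # already the preferred variant
    return urlunsplit(
        (PREFERRED_SCHEME, PREFERRED_HOST, parts.path, parts.query, parts.fragment)
    )
```

With this rule, the three non-preferred variants all resolve to the same address, so visitors and crawlers only ever land on one location.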

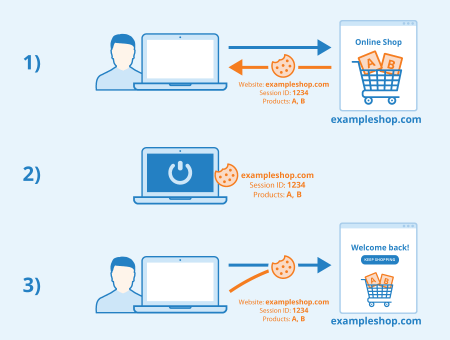

3. Session IDs

Session IDs are a way for website owners to track and store information about visitors to their website.

This technical error is commonly found in online stores where each visit, called a “session,” is created and stored to track details such as the visitors’ activities on your website and the items they put in the shopping cart.

The unique identifier for that session is what is called a “session ID,” and it is unique to that particular session.

The session ID is added to the URL, and that creates another URL.

A session URL usually looks like this:

- Example: com?sessionId=huy4567562pmkb5832

Therefore, to summarize, session IDs track and store your visitors’ activities by adding a long string of alphanumeric codes to the URL.

Even though this seems like a worthy cause, it still counts as duplicate content, sadly.

Solution to Cause

- Add a canonical URL that points to the original, SEO-friendly web page.
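A minimal sketch of this fix, assuming the session parameter is named `sessionId` as in the example above: the hypothetical helper `canonical_link_tag` removes that parameter and builds the canonical tag you would place in the page's `<head>`.

```python
from html import escape
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumption: session parameter names to drop, compared case-insensitively.
SESSION_PARAMS = {"sessionid"}


def canonical_link_tag(url: str) -> str:
    """Build a canonical tag pointing at the URL without its session ID."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in SESSION_PARAMS
    ]
    clean = urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))
    return '<link rel="canonical" href="{}">'.format(escape(clean, quote=True))
```

Every session URL for a page then declares the same clean address as its canonical version.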

4. Sorting and Tracking Parameters

When website owners and developers want to sort and track certain parameters, they make use of a type of URL called “parameterized URLs.”

A good example is the UTM parameterized URL in Google Analytics that tracks the visits of users from a newsletter campaign.

They can look like this:

Example of original URL:

- http://www.abcdef.com/page

An example of a UTM parameterized URL:

- http://www.abcdef.com/page?utm_source=newsletter

Even though URL parameters serve some good purposes, like displaying another sidebar or altering the sort setting for some items, they, of course, do not change your web page’s content.

Therefore, because the content of the two web addresses is the same, search engines count the UTM-tagged URL as duplicate content.

Solution to Cause

- Use canonical URLs to point every parameterized link on your website to its SEO-friendly variant, without the sorting and tracking parameters.
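The canonical target for a parameterized URL can be derived mechanically. The sketch below is one possible approach, not a standard: it assumes tracking parameters start with `utm_` and that sorting uses parameters named `sort` or `order`, which may not match your site.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

TRACKING_PREFIX = "utm_"          # UTM tracking parameters
SORT_PARAMS = {"sort", "order"}   # assumed names for sorting parameters


def strip_sort_and_tracking(url: str) -> str:
    """Return the URL without sorting and tracking parameters."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if not key.lower().startswith(TRACKING_PREFIX)
        and key.lower() not in SORT_PARAMS
    ]
    return urlunsplit(
        (parts.scheme, parts.netloc, parts.path, urlencode(kept), parts.fragment)
    )
```

Parameters that do change the page content, such as a product ID, are left in place.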

5. URL Parameter Order

The order in which URL parameters are arranged also contributes to duplicate content issues.

Example:

- In the CMS, URLs are created in the form of /?P1=1&P2=2 or /?P2=2&P1=1.

P1 and P2 stand for Parameter 1 and Parameter 2, respectively.

Even though the CMS sees the two URLs as the same since they offer the same results, search engines see them as two different URLs, flagging them as duplicate content.

Solution to Cause

- Your developer or programmer should use the same consistent approach to order parameters.
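One consistent approach is to sort the parameters alphabetically before a URL is generated. The function name below is hypothetical, and alphabetical order is only one possible convention; a sketch:

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def normalize_param_order(url: str) -> str:
    """Return the URL with its query parameters in alphabetical order."""
    parts = urlsplit(url)
    pairs = sorted(parse_qsl(parts.query, keep_blank_values=True))
    return urlunsplit(
        (parts.scheme, parts.netloc, parts.path, urlencode(pairs), parts.fragment)
    )
```

Both `/?P1=1&P2=2` and `/?P2=2&P1=1` then come out as the same URL.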

6. Content Syndication and Scrapers

This issue arises when your content is used by other website owners without your permission and it is common with popular websites.

They don’t link to your website, and search engines just see the same or almost the same content on multiple pages.

Solution to Cause

- Reach out to the webmaster of the website involved and ask for removal or attribution.

- Use self-referencing canonical URLs on your own pages.

7. Comment Pagination

This problem arises when your CMS, like WordPress, paginates the comments on the affected web page.

This creates multiple versions of the same web address and content.

Example:

- com/post/

- com/post/comment-page-2

- com/post/comment-page-3

Solution to Cause

- Turn comment pagination off

- Use a plug-in to noindex your paginated pages. Yoast is a good one.

8. Printer-Friendly Pages

When you have printer-friendly web pages, those pages contain the same content as the original pages but have different URLs.

Example:

- com/page/

- com/print/page/

Unless you block the printer-friendly versions, Google will index both versions of the same web page.

Solution to Cause

- Use canonical URLs to point the printer-friendly version to the original version.

9. Mobile-Friendly Pages

Mobile-friendly pages have the same problem as printer-friendly pages. Both are duplicate content and are flagged by search engines.

Example:

- com/page/ – original content

- abcdef.com/page/ – mobile-friendly URL

Solution to Cause

- Use canonical URLs for the mobile-friendly version.

- Employ rel=“alternate” to inform search engines that the mobile-friendly link is a variant of the desktop link.
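The two tags work as a pair: the desktop page announces the mobile variant, and the mobile page points back to the desktop page as canonical. The sketch below generates both. The URLs in the usage example and the 640px breakpoint are assumptions for illustration, and `mobile_annotations` is a hypothetical helper.

```python
from html import escape


def mobile_annotations(desktop_url: str, mobile_url: str, max_width_px: int = 640) -> dict:
    """Return the tag for each page's <head>, keyed by where it belongs."""
    desktop_tag = (
        '<link rel="alternate" media="only screen and (max-width: {}px)" href="{}">'
    ).format(max_width_px, escape(mobile_url, quote=True))
    mobile_tag = '<link rel="canonical" href="{}">'.format(
        escape(desktop_url, quote=True)
    )
    return {"desktop_page": desktop_tag, "mobile_page": mobile_tag}


# Usage with assumed URLs:
tags = mobile_annotations("https://www.abcdef.com/page/", "https://m.abcdef.com/page/")
print(tags["desktop_page"])
print(tags["mobile_page"])
```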

10. Accelerated Mobile Pages

Accelerated mobile pages that help web pages load faster are also duplicate content.

Example:

- com/page/

- com/amp/page/

Solution to Cause

- Use canonical URLs to point the AMP version to the non-AMP variant.

- You can also employ a self-referencing canonical tag.

11. Localization

When your website serves people in different regions who speak the same language, it creates room for duplicate content.

For instance, your website serves audiences in the UK, US, and Australia, and you create different versions for them.

These website variants will contain virtually the same information, besides currency differences and other specifics, and therefore, still count as duplicate content.

It’s important to point out that content that is translated into another language doesn’t count as duplicate content.

Solution to Cause

- Hreflang tags are very effective in telling Google the relationship between the alternate websites.
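As a sketch of what those tags look like for the UK, US, and Australia example, the hypothetical helper below builds one `<link>` element per regional variant. The URLs in the usage example are assumptions. Each regional page would carry the full set of tags, including the one that points to itself.

```python
from html import escape


def hreflang_tags(variants: dict, x_default: str = None) -> list:
    """Build hreflang link tags from a {language-region code: URL} mapping."""
    tags = [
        '<link rel="alternate" hreflang="{}" href="{}">'.format(
            escape(code, quote=True), escape(url, quote=True)
        )
        for code, url in variants.items()
    ]
    if x_default is not None:
        # Optional fallback for visitors who match none of the listed regions.
        tags.append(
            '<link rel="alternate" hreflang="x-default" href="{}">'.format(
                escape(x_default, quote=True)
            )
        )
    return tags


# Usage with assumed URLs:
for tag in hreflang_tags(
    {
        "en-gb": "https://www.abcdef.com/uk/",
        "en-us": "https://www.abcdef.com/us/",
        "en-au": "https://www.abcdef.com/au/",
    }
):
    print(tag)
```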

12. Attachment URLs for Images

Some websites have image attachments with dedicated web pages.

These dedicated web pages only show the image and nothing else, and this leads to duplicate content because the same near-empty page is repeated across all the auto-generated URLs.

Solution to Cause

- Turn off the dedicated pages feature for your CMS images.

- If it’s WordPress, use Yoast or any other plug-in.

13. Staging Environment

In some scenarios, like when you want to change something on your website or install a new plug-in, it’s necessary to test your website.

You don’t want to do this on your live website, especially when you have a massive number of visitors daily.

The risk of something going awry is too high.

Enter the staging environment.

A staging environment is another version of your website, either an exact replica or something slightly different, that you use for testing.

This becomes problematic when Google identifies these alternate URLs and indexes them.

Solution to Cause

- HTTP authentication is a good way to guard your staging environment.

- VPN access and IP whitelisting are also effective.

- Apply the robots noindex directive if the link is indexed already so that it can be taken out of the index.
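One way to apply a noindex directive across a whole staging site is the `X-Robots-Tag` response header. The sketch below assumes the staging site is served by a WSGI application; `add_noindex_header` and `demo_app` are hypothetical names used for illustration. Keep in mind that a crawler has to be able to fetch a page to see the directive.

```python
def add_noindex_header(app):
    """Wrap a WSGI app so every response carries an X-Robots-Tag noindex header."""

    def wrapped(environ, start_response):
        def patched_start(status, headers, exc_info=None):
            # Replace any existing X-Robots-Tag value with the noindex directive.
            headers = [h for h in headers if h[0].lower() != "x-robots-tag"]
            headers.append(("X-Robots-Tag", "noindex, nofollow"))
            return start_response(status, headers, exc_info)

        return app(environ, patched_start)

    return wrapped


def demo_app(environ, start_response):
    """Stand-in for the staging site (illustrative only)."""
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [b"staging"]


staging_app = add_noindex_header(demo_app)
```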

14. Case-Sensitive URLs

Google treats URLs as case-sensitive.

Example:

- com/page

- com/PAGE

- com/Page

All of these URLs are distinct and treated differently by Google.

Solution to Cause

- Use a consistent URL format in your internal links. Don’t link internally to multiple variants of the same URL.

- Use canonical URLs.

- Use redirects.
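Before choosing which variant to keep, it helps to know where case-only duplicates exist. The sketch below is a hypothetical helper that assumes you already have a list of crawled URLs. It groups URLs that differ only in the letter case of the host or path.

```python
from collections import defaultdict
from urllib.parse import urlsplit


def case_variant_groups(urls):
    """Return groups of URLs that are identical apart from host/path letter case."""
    groups = defaultdict(set)
    for url in urls:
        parts = urlsplit(url)
        key = (parts.scheme, parts.netloc.lower(), parts.path.lower(), parts.query)
        groups[key].add(url)
    # Only groups with more than one distinct spelling are duplicates.
    return [sorted(variants) for variants in groups.values() if len(variants) > 1]
```

Each group it returns is a set of addresses that should be consolidated with a redirect or a canonical URL.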

Conclusion

Duplicate content creates a lot of confusion during Google indexing and, besides lower rankings for your website, duplicate content can affect user experience.

That is why you should apply the methods above and rid yourself of duplicates once and for all.

Canonical URLs are a good place to start.

How To Develop A Link Building Strategy For Your Website

Link building is important for search engine optimization. You should definitely include link building in your SEO strategy. However, link building should also be part of your growth strategy. In addition to driving traffic to your website, it can also attract new audiences.

Be careful not to engage in any negative practices. These may seem tempting, but they can damage your rankings in the long run. The purpose of this post is to share some tips on how you can create a successful link-building campaign. Get the right audience and the proper links to your website with the right strategy.

What Is A Link-Building Strategy?

A link-building strategy is a process by which webmasters collect references from other websites that link back to their own content. A strong profile of backlinks (links from other websites back to one’s own content) is one of Google’s most important ranking signals.

The more people link to your website, the stronger the social proof that your website is relevant. While you can organically get quality backlinks from publishing strong content, you can also intentionally use strategies to build them.

Why Link-Building Strategy Is Important:

Link building is one of the major ranking factors used by search engines, which determines who appears on the first page of search results.

According to a widely cited Backlinko study of Google search results, pages in the top position in the SERPs have an average of 3.8 times more backlinks than pages in positions 2-10.

As part of their ranking algorithms, Google, Bing, Yahoo, and other search engines look at the number of links that point to your site (and the quality of those links).

Additionally, links to your website enable users to find your site and increase traffic and trust. When other sites believe you know what you’re talking about, users are more likely to trust you.

Successful Link-Building Strategies:

Get To Know Your Audience

It’s essential to know two things if you want to draw more people to your website: who your audience is right now and who your ideal audience looks like.

By doing so, you will be able to retain and expand your current audience and reach new audiences who are interested in what you are offering. Take the time to find out who your audience is.

You can use this information to better understand them and determine who your target audience is and if you are reaching them today.

Here’s a list of sites you should check out.

Once you identify your desired audience, you need to create a list of websites that can assist you in reaching them. Identify which websites already appeal to your target audience. Your website can benefit from links from these websites to reach people who might be interested in it but don’t know about it yet.

Remember that not every website should link to yours, so take care not to overdo it. Spam links or links from websites that have absolutely nothing to do with your niche are not valuable. They can even backfire and damage your search engine ranking.

Write Great Content

To make other websites want to link to your page, you need to create content that makes them want to do so. If you want to achieve this goal, you should produce quality content.

Take the time to consider what will appeal to your audience, what they want to know, and what you can offer that is unique. Make sure you do more than just explain why your products are so great and why your customers should definitely get them.

Organize your content around answering an audience question or solving a problem they’re encountering. If you provide the information they’re looking for, you’ll build trust and get more links as other sites will perceive the value in your content.

Match Content To Websites

After you are satisfied with your content, it is time to examine the list of websites you created earlier. Where can readers find your content? Which websites are likely to link to it? Sending everything you write to every site on your list may seem like a good idea, but it will probably earn you fewer links. You will come across as spammy if you make people read five blog posts in order to decide which one they like.

Make sure you choose wisely and find sites that relate specifically to your blog post or page. Due to the relevance of your blog post to their content, they may be more inclined to link to it. The visitors to your website that follow that link are more likely to be interested in your content, resulting in a higher chance of conversion and recurring traffic.

Write Guest Posts

Guest posting can help you build credibility and put your brand in front of new audiences, and if you can include a link to your website within the content, it can also boost your search engine rankings.

Outreach

When you’ve decided which website(s) to approach, you need to contact them. An email is a great way to contact someone, but Twitter is another great choice. Your chances of getting a backlink improve when you know the website and the audience it caters to.

The personal touch helps you reach the people you want to reach. Never send automated emails or direct messages. Write a polite email that tells them about your content and asks them to link to it. It is important to note that you won’t always receive a reply.

The more you can explain why your content is unique, the better your chances of getting a link.

Use Social Media!

You can earn links to your content by reaching out to specific people or websites. Social media is also a great way to get links and reach new audiences at the same time.

Post your new content on social media sites like Twitter to reach new audiences. Tweet your new content to specific people who may enjoy it. Facebook also gives you the option to promote your articles and reach new people. You are likely to receive additional links if people like, share, and talk about your content on social media.

How to Do Local SEO (Best Practices)

Local search engine optimization is one of the best marketing tools that can be used to bring in new customers. Here, you will discover how to maximize your ranking, traffic, and lead generation.

Local SEO is an indispensable marketing tool for businesses seeking to attract potential customers, given that 90% of consumers search for local businesses online.

Localized searches and user targeting are essential whether your business has a physical location or an online presence that serves a particular region. With this strategy, you can outrank your competitors and attract more customers by ranking higher in Google searches.

We will show you how to generate more traffic from local searches using a local SEO strategy for any business.

How to Do Local SEO | Follow Some Steps

When you decide that your website needs local SEO, you need to plan to increase your rankings and traffic in your local area.

This guide will show you how to create a winning local SEO campaign in 10 steps.

1. Take an inventory of your products and services

Choose the keywords that you would like your business to rank for. Service and product offerings will be a primary determinant.

You could be offering social media marketing, Facebook marketing, paid advertising, etc., if you run a local digital marketing agency.

If you own a restaurant, you might consider putting the various things you offer, including “Chinese food,” “Chinese restaurant,” or “taco place,” under the same umbrella.

The objective here is to consider what your business offers and identify the key search terms customers may use to find a business like yours. Make a list of these terms so your keyword research has a starting point.

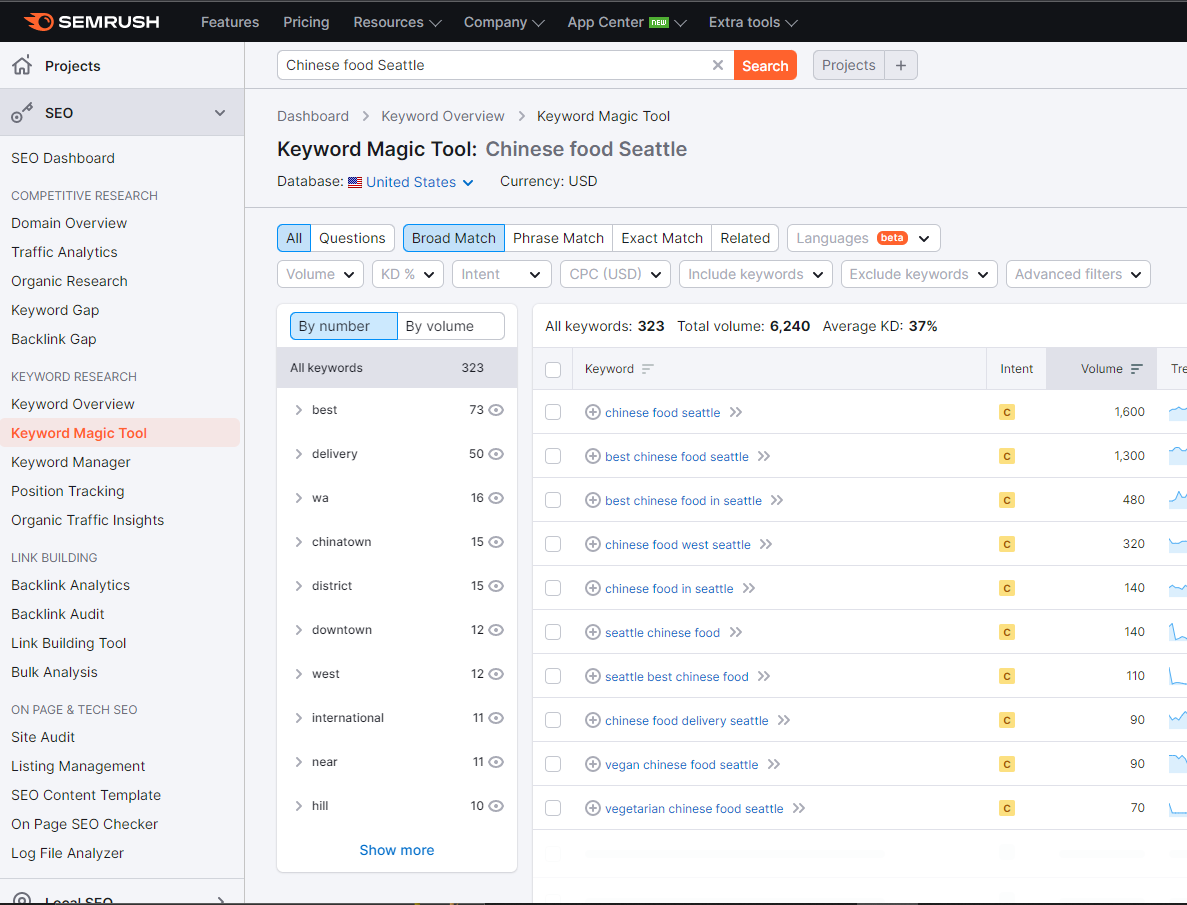

The next step is to use local SEO tools to find out which terms are being searched for locally, identify geo-specific keywords, and assess search volume, guiding your decision as to which keywords to target in your online marketing strategy.

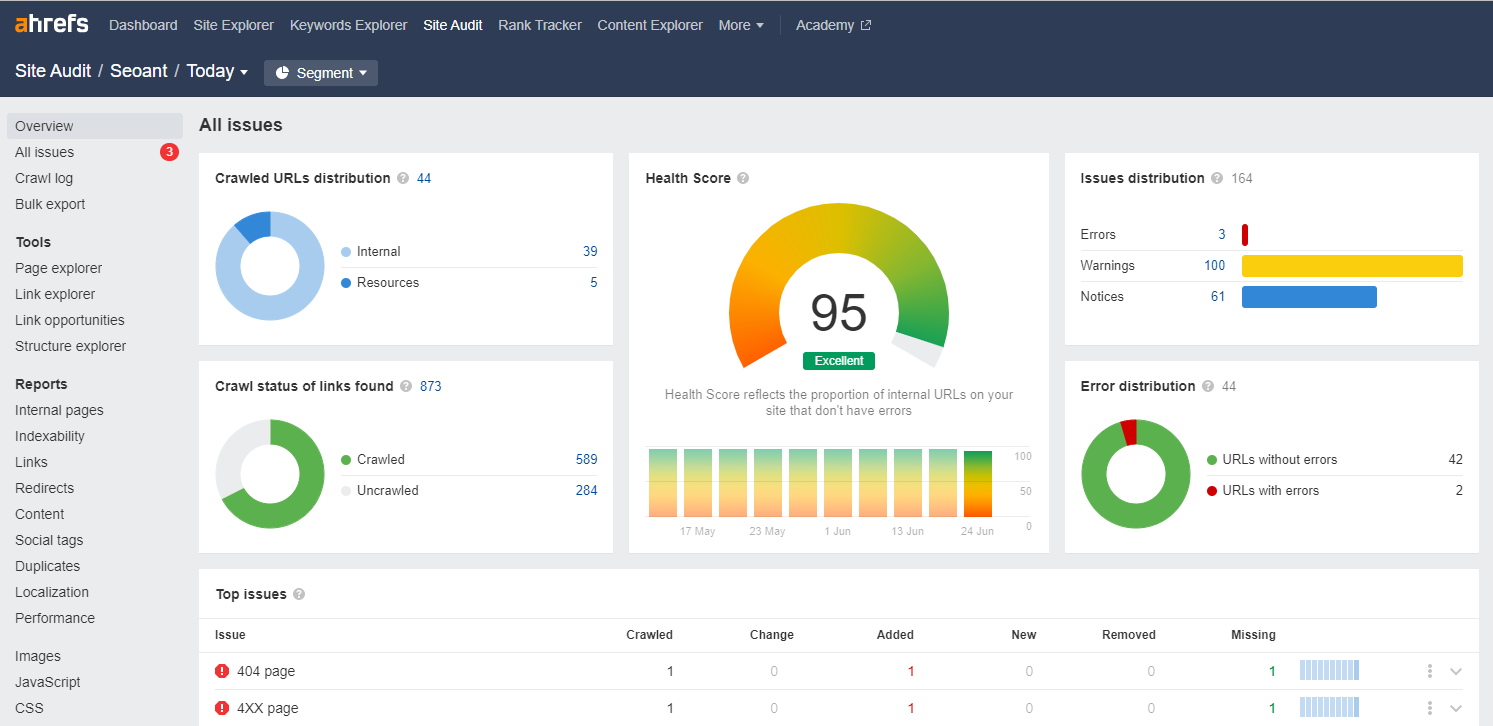

2. Analyze your current website.

You should first determine what’s broken on your site (if anything) and whether the structure of your site is sound before attempting optimization.

You can identify any technical or on-page SEO problems that may be affecting your website’s performance with an SEO audit. You must first deal with these issues before you begin creating new SEO articles or backlinks.

You can create a detailed report of your site’s issues using SEO tools like Ahrefs, Semrush, etc.

A specialist in search engine optimization can thoroughly analyze your website and offer pointers on how to improve it.

Below are a few concerns to keep in mind:

- Poor site performance.

- The page title is missing.

- Meta descriptions are missing.

- Links are broken.

- Content is duplicated.

- An XML sitemap is not available.

- A secure connection is not available (the site is served over HTTP instead of HTTPS).

- Indexation is weak.

- The mobile site is not optimized well.
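Two of the concerns above, a missing page title and a missing meta description, are easy to check programmatically. The sketch below is not a replacement for a full audit tool. It uses Python's standard `html.parser`, assumes you already have the page's HTML as a string, and uses the hypothetical names `HeadAudit` and `audit_head`.

```python
from html.parser import HTMLParser


class HeadAudit(HTMLParser):
    """Collect the page title and meta description while parsing."""

    def __init__(self):
        super().__init__()
        self.in_title = False
        self.title = ""
        self.meta_description = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "title":
            self.in_title = True
        elif tag == "meta" and (attributes.get("name") or "").lower() == "description":
            self.meta_description = attributes.get("content") or ""

    def handle_endtag(self, tag):
        if tag == "title":
            self.in_title = False

    def handle_data(self, data):
        if self.in_title:
            self.title += data


def audit_head(html: str) -> list:
    """Return a list of on-page issues found in the HTML."""
    parser = HeadAudit()
    parser.feed(html)
    parser.close()
    issues = []
    if not parser.title.strip():
        issues.append("missing page title")
    if not (parser.meta_description or "").strip():
        issues.append("missing meta description")
    return issues
```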

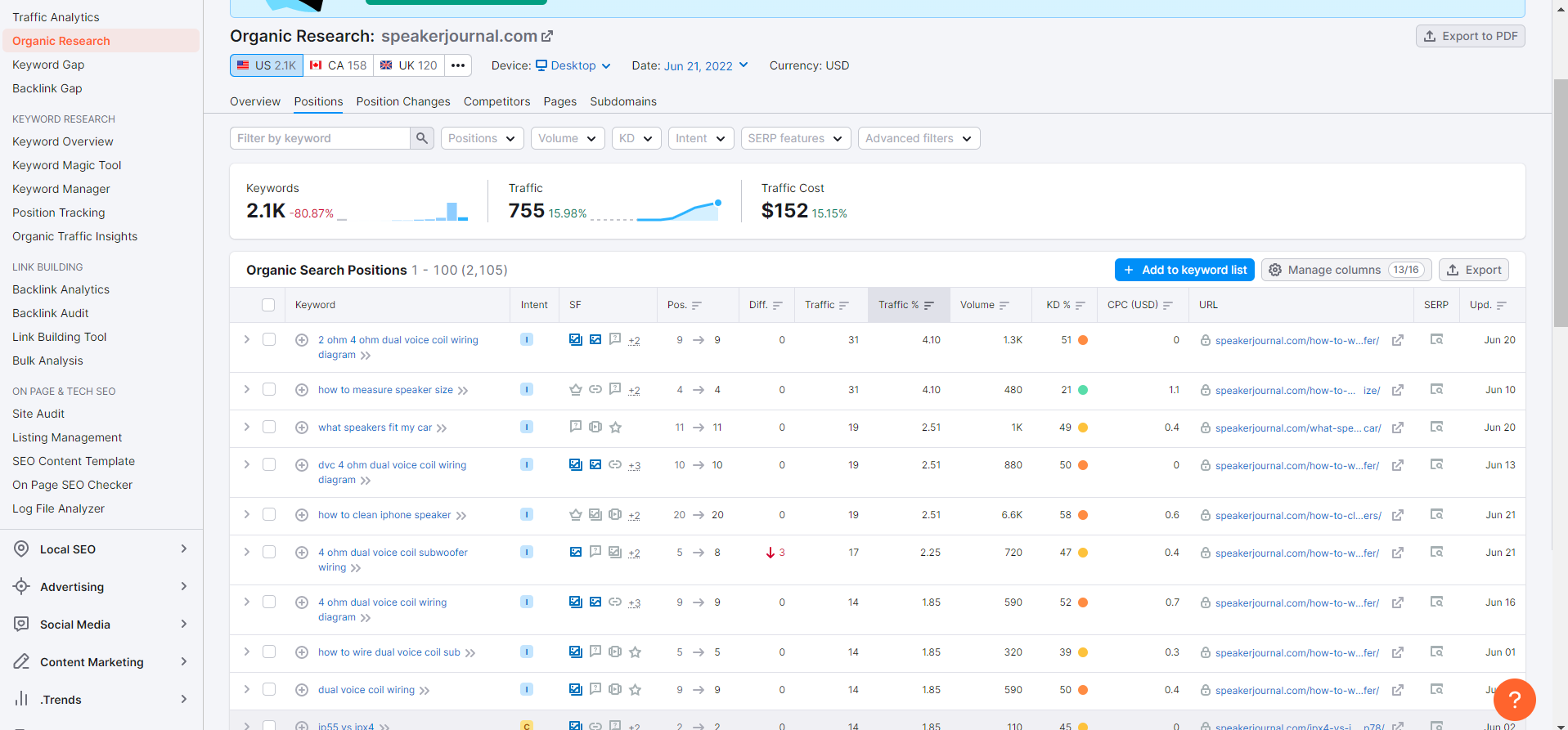

3. Find out what keyword competitors are using.

One of the goals of local SEO is to rank higher than your competitors in local search results. To achieve this, it is essential to know which keywords they rank for and how they rank for them.

Semrush and Ahrefs are SEO tools that can help you analyze your competitors’ rankings and backlinks to see their performance on specific keywords. It is also possible to determine what keywords your site currently ranks for.

To see what keywords your competitors are ranking for naturally, simply search for their domain in your chosen SEO tool.

To determine if your website is a suitable fit for these keywords, you can view the search volume and competition metrics.

You will want to make sure all of the keywords you choose are related to your business, the services and products you offer, what your potential customers are searching for, and the area in which you are operating.

The traffic you generate for your site should be localized and relevant.

4. Select keywords relevant to your local area.

Imagine you own a Chinese restaurant in Seattle, Washington. Let’s say you sell a lot of food in the restaurant.

In step 1, you listed keywords to describe the products or services you offer. In step 3, you found out what keywords your competitors are using.

With these two lists combined, you can use SEO tools to find out which terms are searched for and how competitive they are. When searching for keywords, you can also add your location to the terms on your list, for example:

- best Chinese food in Seattle

- Chinese food west Seattle

- Chinese food in Seattle

- Seattle Chinese food

- Seattle’s best Chinese food

As you perform keyword searches like this, keep searching until you have a comprehensive list of as many localized, relevant keywords as possible.

There are many variations of the product or service you offer and the different areas in which you serve, so make sure you search for those as well.
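Generating those variations by hand gets tedious, so a short script can help. The sketch below combines services and areas using a few phrase templates. The templates and the function name `local_keywords` are assumptions for illustration, and the output is only a candidate list to check in your SEO tool.

```python
from itertools import product

# Assumed phrase patterns; adjust them to how your customers search.
TEMPLATES = (
    "{service} in {location}",
    "{location} {service}",
    "best {service} in {location}",
)


def local_keywords(services, locations):
    """Return candidate geo-specific keywords, without duplicates."""
    seen = set()
    keywords = []
    for service, location in product(services, locations):
        for template in TEMPLATES:
            keyword = template.format(service=service, location=location)
            if keyword.lower() not in seen:
                seen.add(keyword.lower())
                keywords.append(keyword)
    return keywords


for keyword in local_keywords(["Chinese food"], ["Seattle", "west Seattle"]):
    print(keyword)
```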

5. Apply on-page optimization techniques.

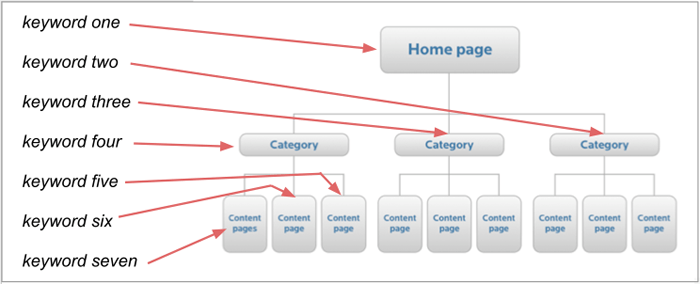

The localized keywords you identified in steps 1, 3, and 4 should now be used to optimize your site on-page.

To make your website search engine friendly, follow on-page SEO best practices.

Here are some things you should do:

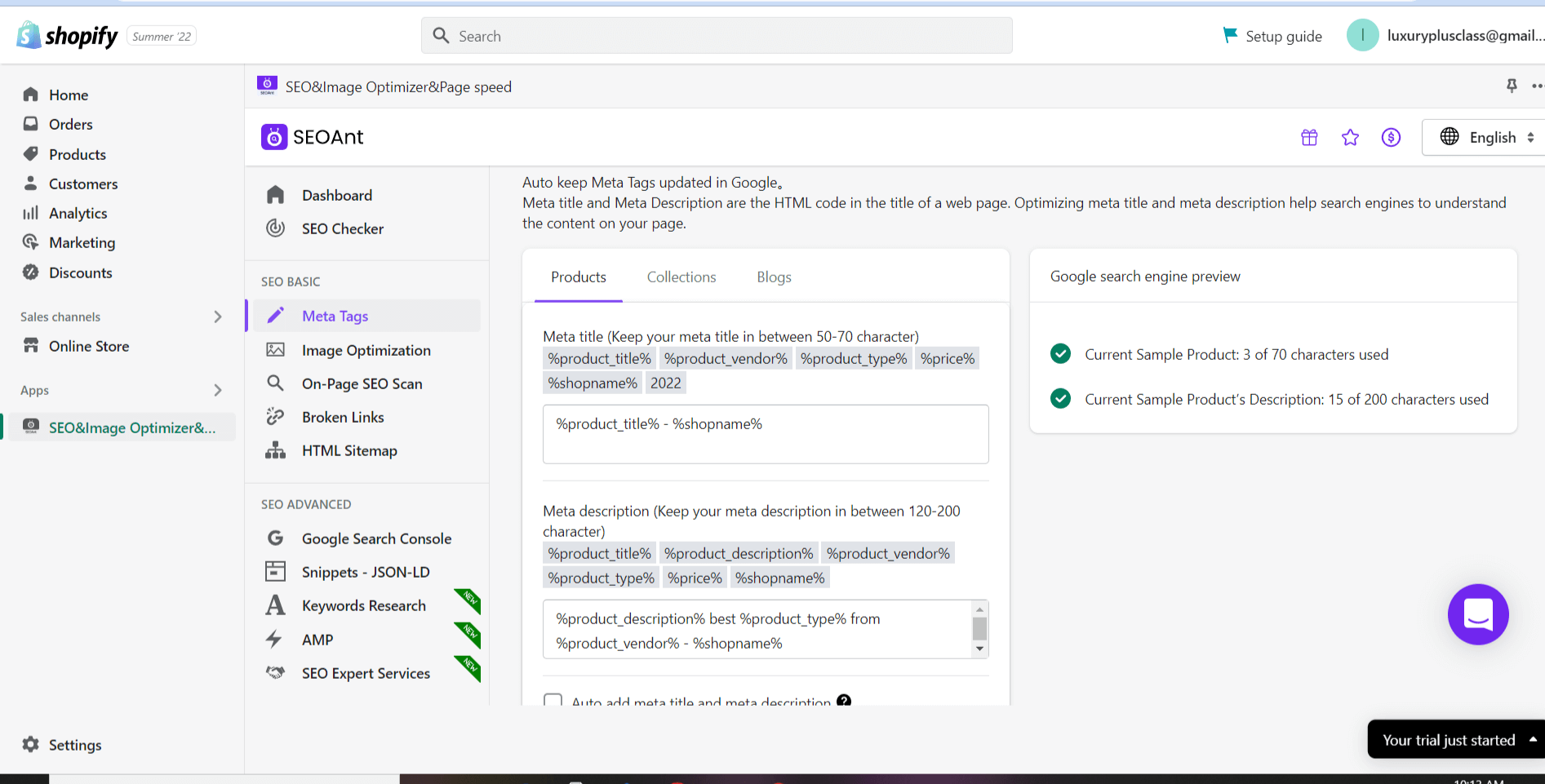

- Map your keywords: It is very important to map your target words to each page of your website. It is ideal for every page to have a target keyword relevant to that page’s content.

- Optimize titles and meta descriptions: The title and meta description of each page on your website should contain the keywords you want to target.

- Content Creation: Write content that describes your website’s services and products in an informative and keyword-optimized way. Make sure you include your target keywords throughout your writing while keeping your target audience in mind.

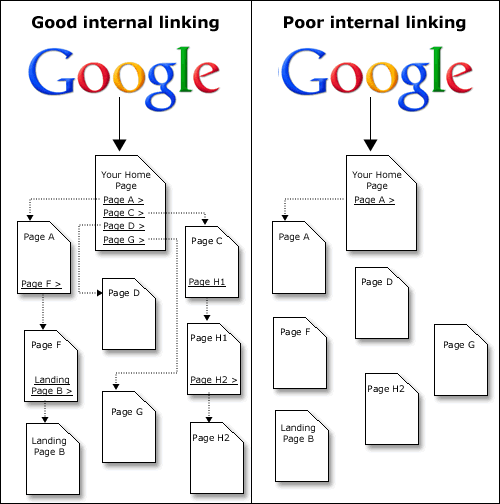

- Internal Linking: Interlink your website’s pages for added benefit to users. This will make it easier for users to find important information and get to your most important pages.

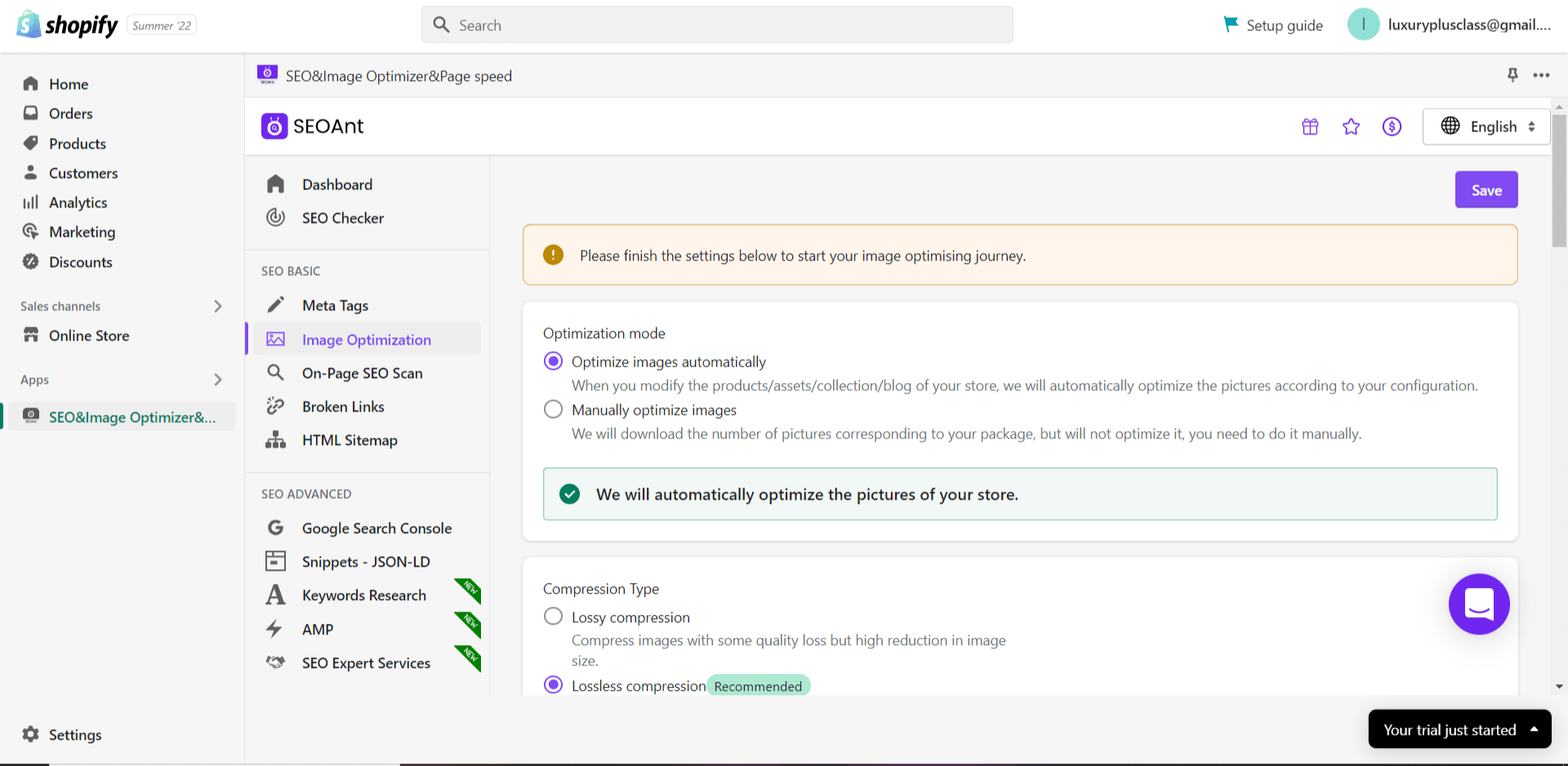

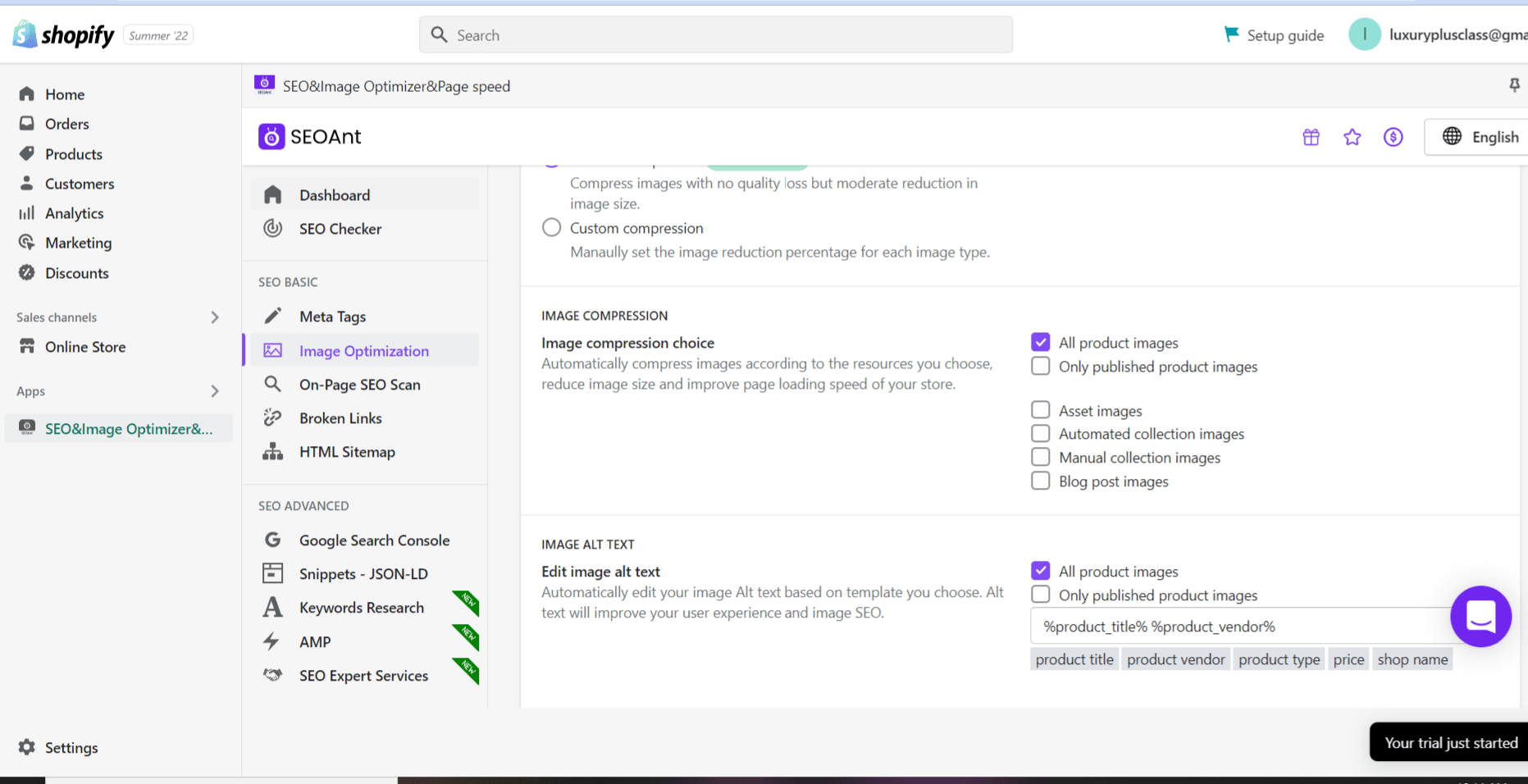

- Optimize your images: Ensure that your images have optimized alt text, ideally incorporating your keywords. To improve the speed at which your website loads, you should also reduce the file size of your images.

- Structure of URL: Ensure your URLs are simple and concise. The target keyword should be included in each URL. Replace all broken links on your website.
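To illustrate the URL guidance in the last item above, the sketch below turns a page title or target keyword into a short, lowercase, hyphen-separated slug. `slugify` here is a hypothetical helper written for this example, not a function from a particular CMS.

```python
import re
import unicodedata


def slugify(text: str) -> str:
    """Convert a title or keyword into a simple, URL-friendly slug."""
    # Drop accents and other non-ASCII characters.
    ascii_text = (
        unicodedata.normalize("NFKD", text).encode("ascii", "ignore").decode("ascii")
    )
    # Replace every run of non-alphanumeric characters with a single hyphen.
    return re.sub(r"[^a-z0-9]+", "-", ascii_text.lower()).strip("-")


print(slugify("Best Chinese Food in Seattle!"))  # best-chinese-food-in-seattle
```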

6. Design local landing pages

Web pages created specifically for organic local search listings are known as localized landing pages. You should optimize these pages with the geo-specific keywords that you have identified, and the content should include helpful information that will engage your intended audience.

To create an effective landing page, it is essential to include H1, H2, and H3 headings, write targeted body content, including internal links, and optimize for mobile search.

In addition to attracting organic traffic, you can drive visitors to these landing pages with paid ads. Don’t forget to include calls to action that will entice your visitors to get in touch with you.

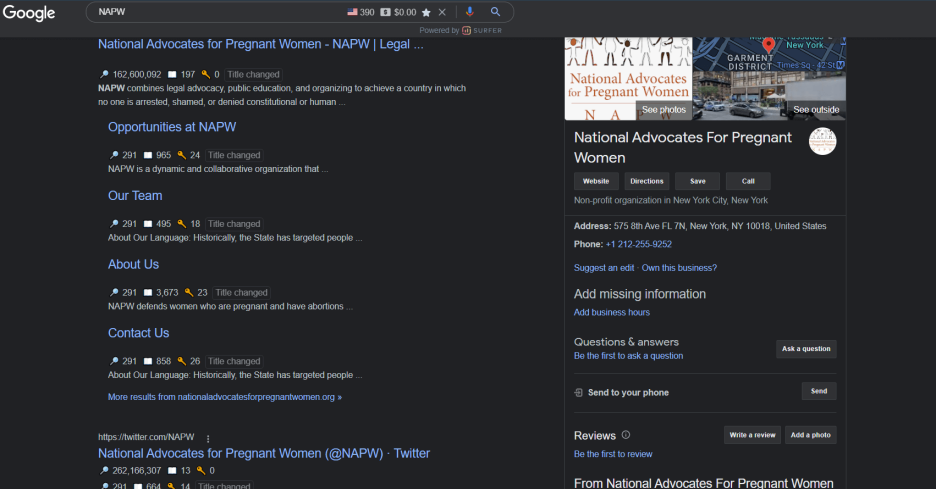

7. Manage your Google My Business listing.

You can use Google My Business to drive more traffic to your business, get customer reviews, and more. You need to have a fully optimized listing to increase the chances of your business being ranked in search.

Claim your business by creating an account or adding a new listing. In addition, you could also provide your business address, phone number, and website URL, as well as images, hours, and other information so that your customers can learn more about you.

GMB is considered one of the most valuable local marketing services for a reason. To improve your local SEO over time, keep your GMB listing fully optimized and update it continuously.

8. Include key information about your business in local directories.

You can also submit your business information to other online directories in addition to GMB. Directory sites like Yelp, YellowPages, Bing Places, and BBB are among the most reputable, but there are many more on the Internet.

Your goal should be to provide your information to authority sources and localized directories in order to drive traffic, earn links, and increase local SEO rankings. All directories should display the same business name, address, phone number, and website URL (NAPW).
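Checking NAPW consistency across many directories is easier with a small script. The sketch below assumes you have copied each listing's details into a dictionary by hand. The business details, the normalization rules, and the function names are all made up for illustration.

```python
import re


def normalize(record: dict) -> tuple:
    """Reduce a listing to a comparable (name, address, phone, website) tuple."""
    name = " ".join(record["name"].lower().split())
    address = " ".join(record["address"].lower().replace(",", " ").split())
    phone = re.sub(r"\D", "", record["phone"])  # keep digits only
    website = record["website"].lower().rstrip("/")
    return (name, address, phone, website)


def inconsistent_listings(reference: dict, listings: dict) -> list:
    """Return the directories whose details differ from the reference record."""
    expected = normalize(reference)
    return [
        directory
        for directory, record in listings.items()
        if normalize(record) != expected
    ]


# Hypothetical business details, for illustration only.
reference = {
    "name": "Golden Wok",
    "address": "123 Pike St, Seattle, WA",
    "phone": "(206) 555-0100",
    "website": "https://www.abcdef.com/",
}
listings = {
    "Yelp": dict(reference, phone="206-555-0100", website="https://www.abcdef.com"),
    "YellowPages": dict(reference, address="123 Pike Street, Seattle, WA"),
}
print(inconsistent_listings(reference, listings))  # ['YellowPages']
```

The comparison is deliberately strict: "St" and "Street" count as a mismatch, because the aim is for every directory to show exactly the same details.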

Add your business to industry-related directories if possible. Nonetheless, remember that not all directories are created equal; do not link to spammy sites or pay for directory listings just to earn a link.

9. Create a localized link-building strategy

A link-building campaign for a local business can look a bit different from a campaign for a non-local website. Here, the location of a linking site also matters when you decide whether to seek a link from it.

Ensure that you get links from companies in your area. In addition, to optimize local results, create localized content and ask for localized anchor texts.

You can get backlinks in these ways:

- Submit to directories.

- Do outreach.

- Write guest posts.

- Produce content assets.

10. Get positive feedback from customers.

For local SEO, Google My Business reviews are an important ranking factor. As a local business owner, it is imperative that your top goal is to generate as many positive reviews as possible on as many listings as possible – ideally, across all your directories.

It is a great idea to conduct exit interviews with your clients after you have completed your engagement with them. Additionally, you can ask your customers for a review on Google My Business, Yelp, Facebook, or any other site they prefer.

Be sure to respond to any negative reviews. You can make a good impression by being kind and professional. The way you respond to a negative review can speak volumes about your company.

What Is On-Page SEO?

As a budding eCommerce entrepreneur, one basic yet essential tactic to boost your online business is on-page SEO.

On-page SEO uses several technical SEO elements to focus on creating a site and content that will rank highly on search engine results pages whenever there’s a search query related to your line of business.

These strategies will undoubtedly lead to higher conversion rates, which means one good thing—more money for your business.

However, you’ll need to pay the price for the results you want.

You need to combine both content and technical SEO to create your web pages.

In this article, I’ll cover what on-page SEO is and why it is important, noting how you can incorporate this tactic into your web page content.

Without any further ado, grab a seat, and let’s jump right into on-page SEO.

On-Page SEO: What Is It All About?

On-page SEO, which is also called on-site SEO, is the process whereby you create optimized content on your web page for your users and search engines like Google.

There are several different on-page SEO tactics you can use to increase the rankings of your website.

Some examples include optimizing content, title tags, URLs, building links, and so on, which help to boost user experience and increase rankings on search engines.

Why Is On-Page SEO Important?

It’s important to learn and use the best on-page SEO practices because Google regularly updates its algorithm so that it can boost the overall experience of users as well as understand user intent.

Also, Google places a high premium on user experience, which is why you should employ this strategy.

If your web pages are well optimized, Google understands your content better, which will help in the organization and ranking of the website. The most basic part of this strategy is incorporating keywords into your content.

Google uses keywords to know if your content is relevant to the keywords in a search query.

However, this doesn’t mean that you should stuff your content with keywords or have no regard for the quality of the content.

Therefore, to carry out effective on-page SEO, you need to consider:

- User experience

- Search intent

- Bounce Rate and Dwell Time

- Click-through-rate

- Page loading speed

How to Optimize Your Content for On-Page SEO: The 12 Essential Factors

There are 12 essential elements you need to know to successfully apply on-page SEO to your business.

We’ll discuss each element under three subheadings:

- Content

- Website Architecture

- HTML

Content

As you well know, content is king and will forever be king.

Content without SEO is like a new car without an engine—it can’t work.

So, what are the essential on-page SEO factors to consider for your content?

1. E-A-T

E-A-T stands for Expertise, Authoritativeness, and Trustworthiness.

It is one method Google uses to weigh your web pages.

Google mentions it 135 times across the 175 pages of its Search Quality Guidelines, which shows why it plays such an important part in how search results are evaluated.

What this factor is simply saying is, can your content stand as a reliable information resource on that particular topic?

If it can, then you’ve done a good job ranking-wise.

2. Keywords

The easiest way to show Google and the other search engines that your content is relevant to a user’s search query is by featuring the query keywords in your content.

You can feature the keywords in your headings, body, conclusion, or all three for better results.

For instance, if you are optimizing the content of a web page for a furniture store, you should include keywords like dining room set, cupboard, sofa, end table, and so on.

Additionally, you should include keywords that users may likely search for.

Don’t forget to include long-tail keywords if your store is a specialized one.

3. SEO Writing

You should create unique content with a focus on not only ranking high in the search engines but also converting the users visiting your site.

How do you achieve both? You can achieve both by using the best SEO practices.

The most essential tips for SEO writing include:

Prioritize Readability: Make it easy for your visitors to get the information they want quickly. Make your content easy to scan.

Use Keywords: As I’ve pointed out earlier, feature keywords in your content, and you should apply the principal target keyword in the first paragraph of your content.

That makes the intent of your content crystal clear to search engines and your visitors right from the start.

Furthermore, if you specialize in a certain aspect of a given niche, you should use long-tail keywords to make your website stand out.

However, it is bad to use keywords indiscriminately.

You should not overuse keywords, a technique called “keyword stuffing.”

This approach will be detrimental to your site’s ranking as Google places less priority on sites that practice keyword stuffing.

Therefore, your content will rank low on the search engine results page or Google may not feature it at all.
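If you want a rough self-check for keyword stuffing, you can measure how much of a page’s text a keyword takes up. The sketch below is only an illustration: the function name `keyword_density` and the sample text are my own, and Google publishes no official density threshold, so treat the number as a prompt to reread your copy rather than a pass or fail score.

```python
import re


def keyword_density(text: str, keyword: str) -> float:
    """Return the share of words in `text` taken up by occurrences of `keyword`."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    key_words = re.findall(r"[a-z0-9']+", keyword.lower())
    if not words or not key_words:
        return 0.0
    n = len(key_words)
    # Count every position where the keyword phrase appears word for word.
    hits = sum(1 for i in range(len(words) - n + 1) if words[i:i + n] == key_words)
    return hits * n / len(words)


# Hypothetical sample copy that leans too heavily on one keyword.
sample = "Buy a sofa today. Our sofa store sells every sofa style."
print(f"{keyword_density(sample, 'sofa'):.0%} of the words are the keyword")
```

A high share like the one in this sample is a hint that the text reads as stuffed and should be rewritten for humans first.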

Sentences and Paragraphs: Always endeavor to make your paragraphs and sentences as brief as possible.

When you use unbroken texts, readers will find them very difficult to read.

You should keep the paragraphs and sentences of your content short so that you can retain users and not drive them away.

Subheadings: Don’t forget to use subheadings as they attract the attention of your visitors because their size makes them stand out.

Additionally, don’t forget to use H1 and H2 tags for your titles and subheadings.

They help tell Google the organization of your text.

List Items: Additionally, whenever necessary, apply bulleted lists to your content because they make your content easy to read and scan through.

4. Visual Assets

Applying visual assets like videos, images, and infographics to your content won’t only make your content more appealing to your visitors, but it’ll also boost your SEO rankings.

A study revealed that 36 percent of prospective shoppers employ visual search when shopping online.

That means if you don’t use visual assets of some sort, you’ll lose out on this significant traffic.

Additionally, optimize the text that accompanies each visual, such as its caption and alt text, so that search engines can understand what it shows.

Don’t use images that are too large as this will cause slow loading of your web pages, which will increase your bounce rate.

Lastly, you should ensure that the images are shareable as this will help with backlinking, which is important for E-A-T.

HTML

HTML refers to HyperText Markup Language.

It is the markup that gives structure to the content on your website.

This markup tells your visitors’ browsers what content to display and where to display it.

Search engines like Google also read it to learn what your content is about, which informs how high or low your web page ranks.

The HTML factors relevant to on-page SEO include:

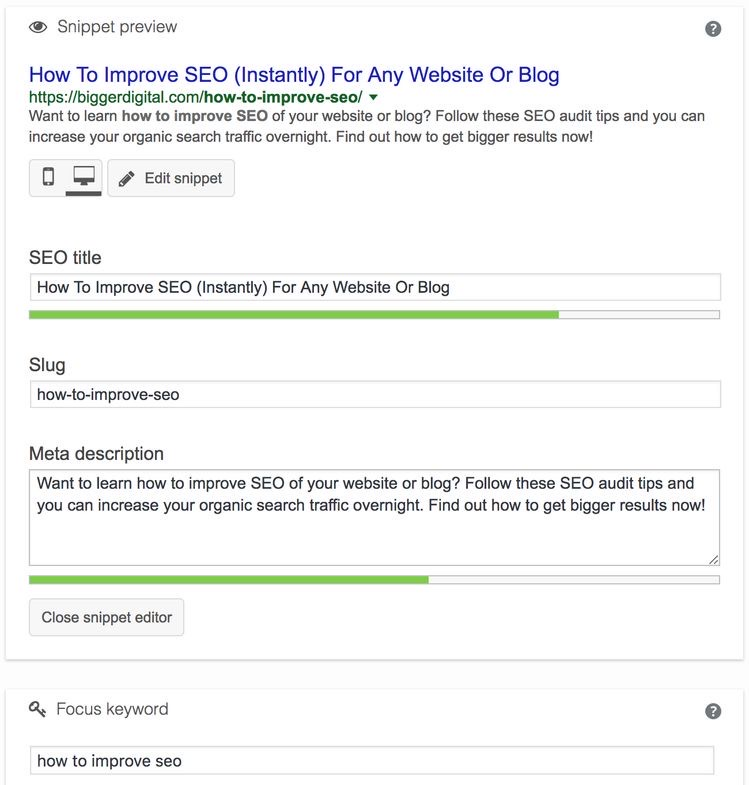

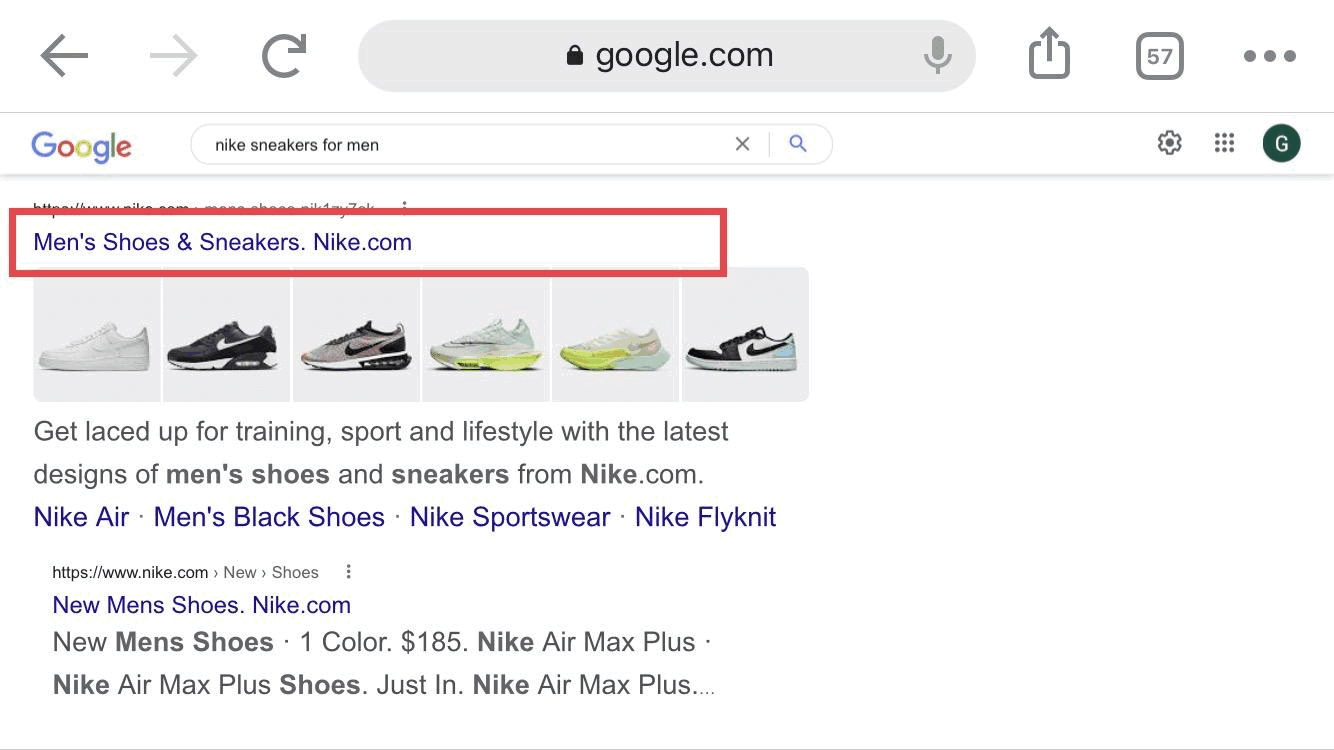

5. Title Tags

Title tags are one key area of HTML you’ll need to focus on.

A title tag is a code snippet that gives your web page a title and, together with the other on-page SEO elements, adds context to your site and increases its relevance.

Title tags usually appear in the search engine result page above the meta description but underneath the URL.

The title tag is a ranking factor and gives searchers an idea about your web page’s contents, which improves the user experience.

The visitors to your website look at the title tag to see if your content relates to their search query.

For the best on-page SEO practices, incorporate keywords into your title tags; it’ll increase your click-through rates and traffic.
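To see this in practice, here is a minimal sketch that pulls the title tag out of a page and checks whether it contains your keyword. The names `TitleExtractor` and `check_title`, the sample page, and the 60-character limit are my own assumptions; the limit is a common rule of thumb, not an official Google figure.

```python
from html.parser import HTMLParser


class TitleExtractor(HTMLParser):
    """Collect the text found inside the <title> element."""

    def __init__(self):
        super().__init__()
        self._in_title = False
        self.title = ""

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self._in_title = True

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def check_title(html: str, keyword: str, max_length: int = 60) -> dict:
    """Report the title text, whether it has the keyword, and whether it fits the assumed limit."""
    parser = TitleExtractor()
    parser.feed(html)
    title = parser.title.strip()
    return {
        "title": title,
        "has_keyword": keyword.lower() in title.lower(),
        "within_length": 0 < len(title) <= max_length,
    }


# Hypothetical page for a furniture store.
page = "<html><head><title>Oak Dining Room Sets | Example Furniture</title></head><body></body></html>"
report = check_title(page, "dining room set")
print(report)
```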

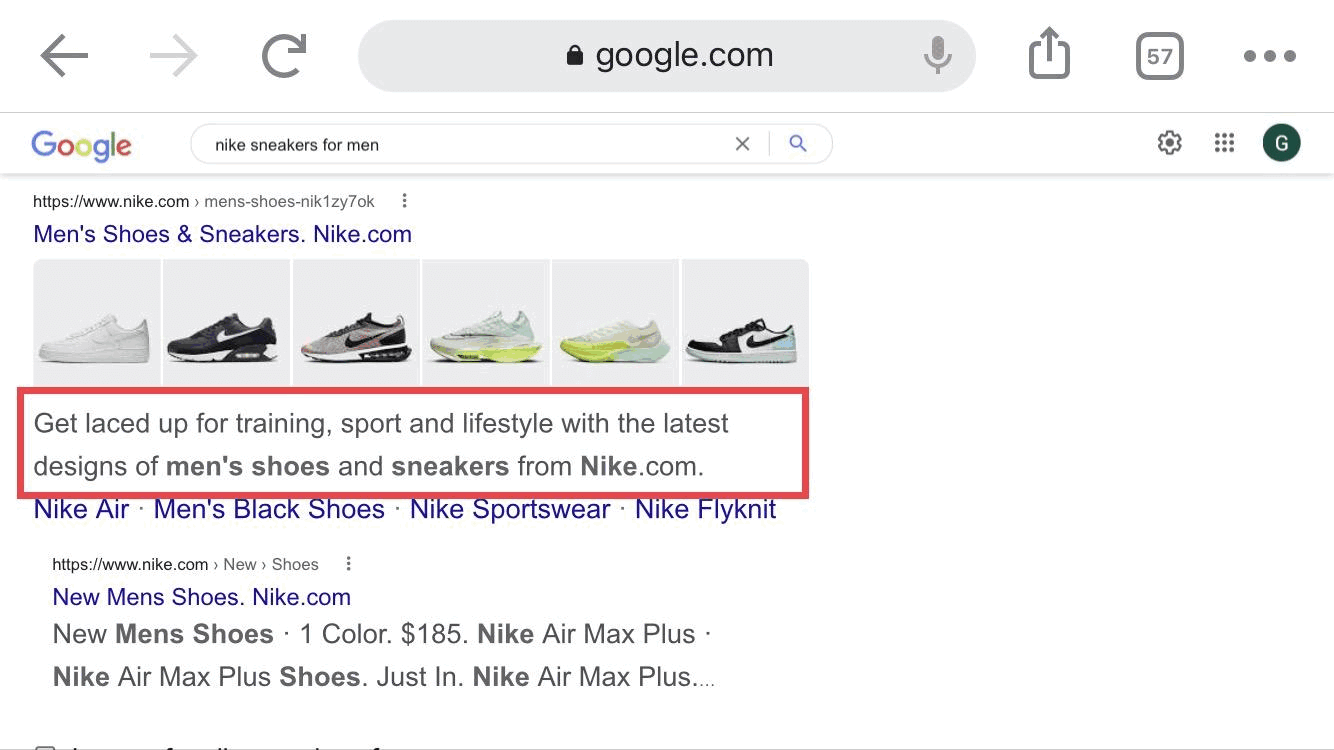

6. Meta Description

Even though some SEO professionals say meta descriptions are not important for SEO ranking, they are still very useful.

That’s because they help search engines like Google to know what your content is about and can also increase your click-through rates and contribute to a positive user experience.

If you use meta descriptions well, internet users will understand your web page content better, which will result in higher click-through rates.

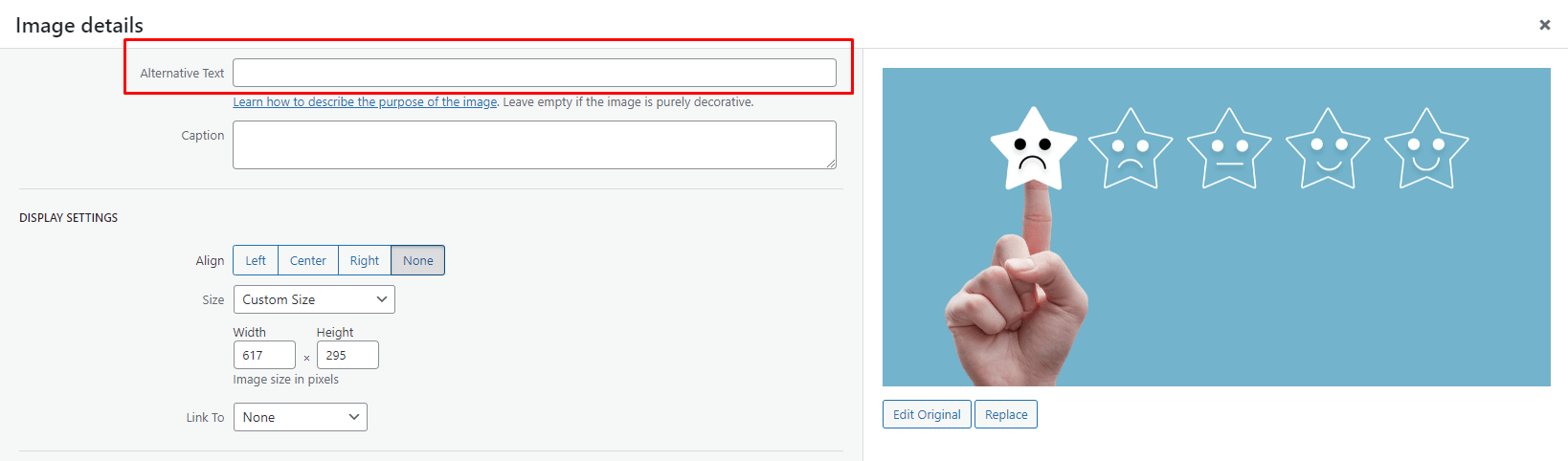

7. Image Optimization


It’s not enough to use visual assets, you should optimize the images as well.

Some image optimization tips to boost on-page SEO include:

- Use SEO-friendly alt text (the alt attribute on each image).

- Make your site load faster by selecting images that are of the right size and format.

- Personalize the image file names instead of using names like IMG-0986.

- Choose images that are phone-friendly
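Two of the tips above, alt text and descriptive file names, are easy to check automatically. The sketch below is one possible approach, not a standard tool: `ImageAudit`, `audit_images`, the file-name pattern, and the sample markup are all hypothetical.

```python
import re
from html.parser import HTMLParser

# Assumed pattern for camera-style names such as IMG-0986.jpg or DSC_0042.png.
GENERIC_NAME = re.compile(r"^(img|dsc|image|photo)[-_ ]?\d+\.\w+$", re.IGNORECASE)


class ImageAudit(HTMLParser):
    """Record images that lack alt text or use a generic file name."""

    def __init__(self):
        super().__init__()
        self.issues = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        src = attributes.get("src") or ""
        filename = src.rsplit("/", 1)[-1]
        if not (attributes.get("alt") or "").strip():
            self.issues.append((src, "missing alt text"))
        if GENERIC_NAME.match(filename):
            self.issues.append((src, "generic file name"))


def audit_images(html: str) -> list:
    parser = ImageAudit()
    parser.feed(html)
    return parser.issues


# Hypothetical markup: the first image has both problems, the second has none.
sample_html = (
    '<img src="/media/IMG-0986.jpg">'
    '<img src="/media/oak-dining-table.webp" alt="Oak dining table with six chairs">'
)
for src, problem in audit_images(sample_html):
    print(src, "->", problem)
```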

8. Geotagging

Even though the world is a global village, your business still begins at a certain location.

Therefore, it’s important to optimize your content to connect with local users. That will boost your on-page SEO.

This tactic is essential for small and medium-sized businesses.

The main SEO tactics to consider when focusing on local traffic include:

- Optimize citations and local listings, including names, phone numbers, and addresses.

- Optimize the website URL

- Optimize business descriptions.

- Utilize third-party applications.

- Get reviews

- Optimize and incorporate links with the other relevant organizations and businesses in your locale.

- Incorporate the target location’s name into your keywords and include the keywords in your content.

Website Architecture

You should structure your website well because a logically organized website will feature more prominently on search engine results pages and provide users with a better experience.

Here are the website architectural factors you should incorporate for maximum on-page SEO results:

9. Site Speed

If your web pages are guilty of slow loading, you’ll not only drive users away, but you’ll have lower rankings as page load speed is a critical factor search engines consider for SEO rankings.

Measures to take to ensure your web pages load faster are:

- Decrease redirects

- Leverage browser caching.

- Activate compression features.

- Optimize the images on your web pages.

10. Responsive Design

Ever since mobile search volume beat desktop search volume, Google has prioritized web pages that are amenable to mobile phone searches.

Mobile phone searches make up 56 percent of all internet searches, while tablets contribute a further 2.4 percent.

These figures go to show that it is very necessary to make your website mobile-friendly.

The least you can do is to have a mobile version of your website.

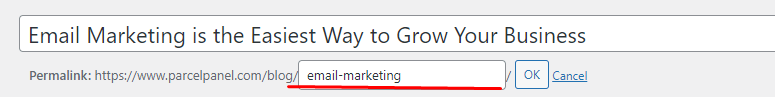

11. URL Structure

Incorporating keywords into your website’s URL was an important SEO ranking factor in the past.

Now, it no longer plays that role.

However, that doesn’t mean you should ignore this tactic completely; otherwise, it wouldn’t have been mentioned.

Some SEO professionals theorize that it is still useful in grouping web pages, and so you should employ it too.

When you incorporate important target keywords into the URL, it tells searchers and Google that you’ll cover the topic on that web page.
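A common way to get keywords into a URL is to turn the page title into a short “slug”. Here is a minimal sketch of that idea; the function name `slugify` and the six-word cap are my own choices, not a rule from any search engine.

```python
import re
import unicodedata


def slugify(title: str, max_words: int = 6) -> str:
    """Turn a page title into a short, lowercase, hyphen-separated URL slug."""
    # Strip accents so that "Café" becomes "Cafe" before filtering characters.
    ascii_text = unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode("ascii")
    words = re.findall(r"[a-z0-9]+", ascii_text.lower())
    return "-".join(words[:max_words])


print(slugify("12 Essential On-Page SEO Factors!"))
```

Keeping the slug short and readable tells both searchers and Google what the page covers before they open it.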

12. Links

Building links helps to direct organic traffic from Google and other search engines to your site.

When you combine link building with other on-page SEO practices and technical SEO elements, your website will be a force to be reckoned with.

Links work together with E-A-T to create good on-page SEO.

Establishing links with websites that are reputable is one efficient way of having expertise, authoritativeness, and trustworthiness.

For on-page SEO purposes, there are three basic types of links you should employ:

Internal Links: Internal links lead users from one page to another on your website.

Internal links help users to engage further with your web pages as they spend more time on your website clicking links, which will in turn decrease the bounce rate.

The links also give Google a general idea of your website’s architecture.

Outbound Links: Outbound links are also called external links. They are links that take users to a website on another domain.

Backlinks: Backlinks are also known as inbound links.

They are links on other websites that point to your web pages.

Inbound links are the most important because they direct traffic to your site and give you the best SEO benefit.

However, one setback with backlinks is that they are very difficult to obtain.
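The difference between internal and outbound links comes down to whether the link stays on your own domain. A minimal sketch, assuming a hypothetical page on www.example.com (the function name `classify_link` is mine):

```python
from urllib.parse import urljoin, urlsplit


def classify_link(page_url: str, href: str) -> str:
    """Label a link found on `page_url` as internal, outbound, or other."""
    # Resolve relative links such as "/contact" against the page they appear on.
    target = urlsplit(urljoin(page_url, href))
    if target.scheme not in ("http", "https"):
        return "other"  # for example mailto: or tel: links
    same_host = target.hostname == urlsplit(page_url).hostname
    return "internal" if same_host else "outbound"


page = "https://www.example.com/blog/post"
for href in ["/contact", "https://other.example.org/", "mailto:hi@example.com"]:
    print(href, "->", classify_link(page, href))
```

Backlinks cannot be found this way because they live on other people’s websites, which is part of why they are harder to obtain and to track.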

Final Thoughts

Everything that has a beginning must have an end.

That said, we’ve come to the end of this article.

I believe if you apply the on-page SEO tactics discussed here, in no time you’ll see your web pages among the top-ranked pages on search engines, especially Google.

What Is Technical SEO and How to Do It Well?

Are you looking to make your website technically optimized for users to have a better experience and for Googlebot crawlers to easily crawl, index, and render your website contents?

Then look no further. In this ultimate technical SEO guide, I’ll be discussing the most relevant technical SEO elements, including hreflang tags, page speed, and many others.

Therefore, I urge you to read on.

Technical SEO: What Is It All About?

Technical SEO is a component of on-page SEO and it involves technically enhancing the characteristics of your website so that it can garner higher organic rankings.

It is a critical aspect of the whole SEO web where you work on elements such as crawling, indexing, rendering, site speed, and website architecture to make your website more comprehensible to search engines.

With technical SEO, your goal is to optimize your website and your content to meet the technical demands of Google and other modern search engines.

To what end? Better rankings and better traffic.

A lot of business owners and marketers have hopped onto this moving train already, and if you haven’t, you better hop right in for the best results SEO-wise.

Why Is Technical SEO Important?

Technical SEO is an essential arsenal to arm your web pages with because:

- Your site will rank higher on Google and other search engine platforms.

- Better rankings mean higher traffic to your website.

- Higher traffic will generate more clicks, which is what you need.

- You retain your customers because of the positive user experience they get.

When an internet user makes a search query on a search engine, say Google, the engine looks through the index its robots have built by crawling the internet to find the web pages that are most suitable for the search query.

Technical SEO is what makes Google robots find your website easily.

Therefore, you need to ensure that you optimize your web pages fully so that they are mobile-friendly, secure, fast-loading, devoid of duplicate content, and the other technical SEO stuff that you’ll learn shortly.

How Can You Improve Your Technical SEO?

Now that we’ve gotten to the good stuff, here are the most basic technical SEO tactics to employ to have better rankings.

1. Make Your Website Secure

A secure website is a website that is technically optimized. The two go closely together.

You should ensure that your website is safe for visitors and that you guarantee privacy.

One of the most basic things to do to secure your site is to employ HTTPS.

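HTTPS stands for Hypertext Transfer Protocol Secure. The S indicates that the connection is encrypted with TLS (Transport Layer Security), the successor to SSL (Secure Sockets Layer), which is the security protocol that keeps the data exchanged with your site secure.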

HTTPS ensures that there’s no interception in the data that is exchanged between the site and the user’s browser.

The SSL certificate ensures that the credentials of your visitors are safe once they log in to your website.

You should make sure your website is HTTPS-compliant because Google uses HTTPS as a ranking signal and places a high premium on security.

You can check if your website is secure on most browsers.

Check the address bar, on the left-hand side, for a lock. If it’s there, the connection to your site is secure.

However, if your site does not use HTTPS, then you’ll see a “not secure” label.

2. JavaScript, HTML, and CSS

These are also critical elements of technical SEO that you need to use. JavaScript is a programming language, HTML is a markup language, and CSS is a style sheet language.

HTML refers to HyperText Markup Language and is the foundation as well as the load-bearing wall of your website.

It provides the basic structure that the browser of your users will require to display the contents of your website.

JavaScript is the language that supplies the code needed by the functional parts of your website. It makes your website elements flashy as well as dynamic.

CSS stands for Cascading Style Sheets and is the language that defines the fonts, colors, and overall appearance of your website.

Without CSS, your website will not be appealing to visitors.

As you may have noticed, all the elements above contribute to a positive user experience, which is an essential factor for ranking well.

3. XML Sitemaps

The XML sitemap is one of the most critical elements of technical SEO, and it is an XML file that displays all the posts, pages, and content on your site, functioning as a roadmap for Google and the other search engines.

It helps to ensure that search engines don’t miss any notable content on your web pages. The XML sitemap is important when search engines are crawling your website.

The XML sitemap is usually classified into pages, posts, tags, or other types of custom posts.

It also includes their titles, the number of images on every page, the date you published them, and the last date you modified them.

A good technical SEO tip is to only include in your XML sitemap pages, posts, and categories that are important to your website.

Don’t include author pages, tag pages, or any other page that doesn’t have unique content.

Additionally, ensure that with every new page you add to your site or with every update to an existing page, the sitemap automatically updates itself.
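To make the idea concrete, here is a minimal sketch that builds a small sitemap in the standard sitemaps.org format. The function name `build_sitemap`, the example URLs, and the dates are hypothetical; in practice your CMS or an SEO plugin usually generates and updates this file for you.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages) -> str:
    """Build sitemap XML from (url, last_modified_date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    return ET.tostring(urlset, encoding="unicode")


# Only important pages go in; author and tag pages are deliberately left out.
important_pages = [
    ("https://www.example.com/", "2024-01-15"),
    ("https://www.example.com/sofas", "2024-02-01"),
]
sitemap_xml = build_sitemap(important_pages)
print(sitemap_xml)
```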

4. Structured Data

Structured data is an important technical SEO tool because, with structured data, Google gets a better understanding of your business, your content, and your website in its entirety.

You can describe to Google and other search engines the products you deal in and provide details about them.

Because you structure this description in a standard format, search engines can find and understand it easily, which puts your content in a better light.

Structured data, besides making your content more comprehensible, helps your content stand out in the search results.
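One widely used format for structured data is JSON-LD with the schema.org vocabulary. The sketch below builds a product description in that format; the function name `product_json_ld` and the product details are made up for illustration, and a real product page would normally carry more fields.

```python
import json


def product_json_ld(name: str, description: str, price: float, currency: str) -> str:
    """Return a script tag holding schema.org Product markup as JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "description": description,
        "offers": {
            "@type": "Offer",
            "price": f"{price:.2f}",
            "priceCurrency": currency,
        },
    }
    return '<script type="application/ld+json">' + json.dumps(data, indent=2) + "</script>"


# Hypothetical product from the furniture store example.
snippet = product_json_ld("Oak dining room set", "Six-seat oak table with chairs.", 499, "USD")
print(snippet)
```

You would place the resulting script tag in the HTML of the product page so that search engines can read the details.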

5. URL Structure

You should ensure that your URLs have a logical and consistent structure throughout your website.

That provides your visitors with good information about their location on your website.

6. Duplicate Content

Duplicate content tends to confuse Google and other search engines.

When you have the same content on different pages on your website, it creates so much confusion because the search engines won’t know which one to rank the highest.

Therefore, they rank the pages having the same content lower on the search engine results page.

One bad thing about duplicate content is that you can have this problem without knowing it.

For some technical reason, the same content may feature on different URLs.

Your visitors won’t even know, but the search engine will know because it’ll see matching content on multiple URLs.

One way you can fix this problem is through a canonical link that allows you to point out the original page or the page that you’ll prefer to rank on Google or other search engines.

7. Thin Content

Thin content is articles, blogs, or web pages that do not provide Googlebot crawlers with enough information to work with.

They lack internal links that’ll direct the crawlers to the other related pages on your website.

Since search engine robots use links to discover the contents on your site, when you have no links, it harms your ranking.

If your content doesn’t adequately satisfy a user’s search query, it can also be thin content.

8. Hreflang Tags

This technical SEO tool is very important for websites that have content in multiple languages.

If you have an international website, you need to make your content resonate with people across different cultures and languages.

Therefore, you need a multilingual website. You cannot achieve this effectively without using hreflang tags.

Hreflang tags tell search engines which language and region each version of a page is intended for.

Through this information, they can provide the right content to visitors, meaning English speakers will get English content, and so on.

Hreflang tags also decrease the chance of having duplicate content on your website and indexing challenges.

Even though it’s understandably difficult to set up, the advantages of setting it up far outweigh the cost.

Therefore, if you want your content to be relevant to visitors from multiple regions, countries, and different languages, employ hreflang tags. It works like magic.
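Part of what makes hreflang tedious is that every language version of a page should list all the versions, itself included. A small helper can generate the tags for you. This is a sketch under my own assumptions: the function name `hreflang_links` and the URLs are hypothetical, and I add an `x-default` entry as the fallback for visitors whose language isn’t covered.

```python
from html import escape


def hreflang_links(versions: dict, default_lang: str) -> list:
    """Build one <link rel="alternate"> tag per language version, plus a fallback."""
    links = [
        f'<link rel="alternate" hreflang="{escape(lang)}" href="{escape(url)}" />'
        for lang, url in versions.items()
    ]
    # x-default marks the page to show when no listed language matches.
    links.append(
        f'<link rel="alternate" hreflang="x-default" href="{escape(versions[default_lang])}" />'
    )
    return links


# Hypothetical English and French versions of the same page.
versions = {
    "en": "https://www.example.com/en/sofas",
    "fr": "https://www.example.com/fr/canapes",
}
tags = hreflang_links(versions, default_lang="en")
print("\n".join(tags))
```

The same set of tags goes into the head section of each language version.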

9. Canonical Tags

As I’ve mentioned earlier, canonical tags are an important technical SEO tool for curbing duplicate content.

They are very useful if you have web pages with similar content or pages with minute differences.

Canonical URLs tell Google which page is the original or main version so that it can rank that page for relevant searches.
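Many duplicates come from the same page being reachable under slightly different URLs, for instance with tracking parameters attached. The sketch below shows one way to reduce such variants to a single canonical URL and print the matching tag. The names `canonical_url`, `canonical_tag`, and `TRACKING_PARAMS` are hypothetical, and the choices to force HTTPS and drop these particular parameters are assumptions you should adapt to your own site.

```python
from html import escape
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumed list of parameters that never change the page content.
TRACKING_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "sessionid"}


def canonical_url(url: str) -> str:
    """Normalize a URL: HTTPS, lowercase host, no tracking parameters, no fragment."""
    parts = urlsplit(url)
    query = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in TRACKING_PARAMS
    ]
    path = parts.path or "/"
    return urlunsplit(("https", parts.netloc.lower(), path, urlencode(query), ""))


def canonical_tag(url: str) -> str:
    """Return the link tag that points search engines at the preferred URL."""
    return f'<link rel="canonical" href="{escape(canonical_url(url))}" />'


print(canonical_tag("http://WWW.Example.com/sofas?utm_source=news&color=grey#reviews"))
```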

10. 404 Pages

404 pages are pages that are shown to visitors when they click on a dead link or when the page they want to go to doesn’t exist or has been deleted.

It can be more frustrating for visitors than a slow-loading page. Search engines dislike 404 error pages as well.

By all means, optimize your website so that there are no dead links on your site.

However, it can be difficult to eliminate all the dead links on a website because websites develop continuously.

To reduce dead links on your site, when you delete a page or change the URL, you should redirect the URL to the new page you created to replace it.

It is also good practice to tell visitors nicely that the web page they wanted to visit no longer exists.

You can also make the experience less annoying by providing easy routes for them to return to the previous page, go to the homepage, or any other important web page on your site.

Additionally, there are tools you can use to remove the dead links afflicting your website.
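The redirect advice above can be pictured as a simple lookup table. In this sketch, `REDIRECTS`, `LIVE_PAGES`, `resolve`, and `dead_links` are hypothetical names with made-up paths; real sites usually configure redirects in the web server or CMS rather than in application code.

```python
# Old paths mapped to the pages that replaced them.
REDIRECTS = {"/old-sofas": "/sofas", "/sale-2021": "/sale"}
# Paths that currently exist on the site.
LIVE_PAGES = {"/", "/sofas", "/sale"}


def resolve(path: str):
    """Return (status_code, destination) for a requested path."""
    if path in REDIRECTS:
        return 301, REDIRECTS[path]  # permanent redirect to the replacement page
    if path in LIVE_PAGES:
        return 200, path
    return 404, None  # nothing here: show a friendly 404 page


def dead_links(links) -> list:
    """List the internal links that would land on a 404 page."""
    return [link for link in links if resolve(link)[0] == 404]


print(resolve("/old-sofas"))
print(dead_links(["/sofas", "/old-sofas", "/armchairs"]))
```

Running a check like `dead_links` over the links in your content after every deletion or URL change keeps dead ends from piling up.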

11. Page Speed

Fast page speed works wonders ranking-wise.

With the average attention span at its lowest for years, nobody has the patience to sit around and wait for your website to load when there are faster alternatives.

A 2016 study showed that as many as 53 percent of visitors to mobile sites leave the website if it doesn’t load within three seconds.

That’s a figure you don’t want working against you.

Therefore, if you have web pages that load slowly, you’ll need to optimize them and make them faster.

Otherwise, visitors will get frustrated with your site and go elsewhere.

A slow website means poor user experience, which ultimately leads to lower search engine results page rankings.

A good tip to make your website faster is to optimize your images and videos so that they are of the optimal size and format.

12. User-Friendly Sites

Making your website user-friendly is a very effective technical SEO tool.

It means you place a high priority on user experience (which Google appreciates) and the structure of the mobile version of your site.

You can make your website friendly to visitors by building it for mobile-first indexing.

This tactic is very important because Google predominantly uses the mobile version of a site for indexing and ranking.

That means you need a fast, mobile version of your website that has the same content as the desktop version.

Additionally, your mobile version should load very fast: in less than six seconds when you test it on a 3G network.

Popups are not user-friendly and you should avoid them at all costs.

You can also feature Accelerated Mobile Pages on your website for better results, as they make mobile web pages load faster and can boost your click-through rates from mobile users.

Accelerated Mobile Pages is a kind of HTML open-source framework that Google created to assist web developers in building websites that are relevant to mobile users.

This tool is very important because it grants you access to more than 50 percent of internet traffic that uses mobile devices.

Conclusion

We’ve come to the end of this ultimate technical SEO guide.

I know there are many elements and it can be very daunting to implement each one of them at once.

However, you can start from somewhere and employ the others with time, just as Rome wasn’t built in a day.

Finally, as you may have noticed, technical SEO is a big baller in the SEO world, and to rank high amongst the best websites, it is a must!

How To Increase Organic CTR

Rank and visibility are essential when it comes to organic search. These two elements directly impact your success as a brand in the online world. Getting your website to appear at the top of search engine results pages (SERPs) is what you should aim for if you want to increase brand awareness, drive traffic to your site, and send users down the conversion funnel.

If you manage an e-commerce site, optimizing your product pages with the right keywords and designing them so they answer users’ questions is essential for increasing conversions and keeping visitors on your site. However, not every page on a website has the same importance regarding organic search; some have a more significant impact than others. This article covers tips that will help you improve your organic CTR and boost your search engine rankings.

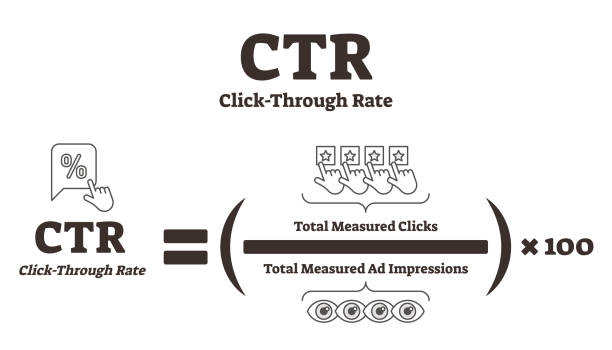

What is organic CTR?

CTR stands for “Click-through rate.” CTR is one of the most critical metrics you need to track, especially if you’re working with a top online marketing company. It measures how many people click on your ad compared to the number of people who see it. The higher your CTR, the better. Organic CTR is the level of engagement your content receives when it appears on search engine results pages. It tracks how likely visitors are to click on one of your links after finding you through the search engine.
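The calculation behind CTR is simple division: clicks divided by impressions, shown as a percentage. A minimal sketch, with a function name and figures I made up for illustration:

```python
def click_through_rate(clicks: int, impressions: int) -> float:
    """Return CTR as a percentage: clicks per 100 impressions."""
    if impressions <= 0:
        return 0.0  # no impressions means there is nothing to measure yet
    return 100 * clicks / impressions


# Hypothetical figures: a listing shown 1,500 times and clicked 45 times.
ctr = click_through_rate(clicks=45, impressions=1500)
print(f"Organic CTR: {ctr:.1f}%")
```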

Why is organic CTR important?

Organic search is an essential part of any digital marketing strategy, and CTR is what makes or breaks your rank. The higher your CTR, the higher you will appear in the search results, leading to more visibility and engagement. Organic CTR is critical to improving brand awareness, driving traffic to your site, and sending users down the conversion funnel. Furthermore, a high organic CTR can help you dominate your competition and even go up against major brands.

If you have a webpage with great content, you probably won’t worry too much about the CTR of that page. However, if you have a webpage with a poor design or one that does not answer the user’s questions, that page will probably have an extremely low CTR.

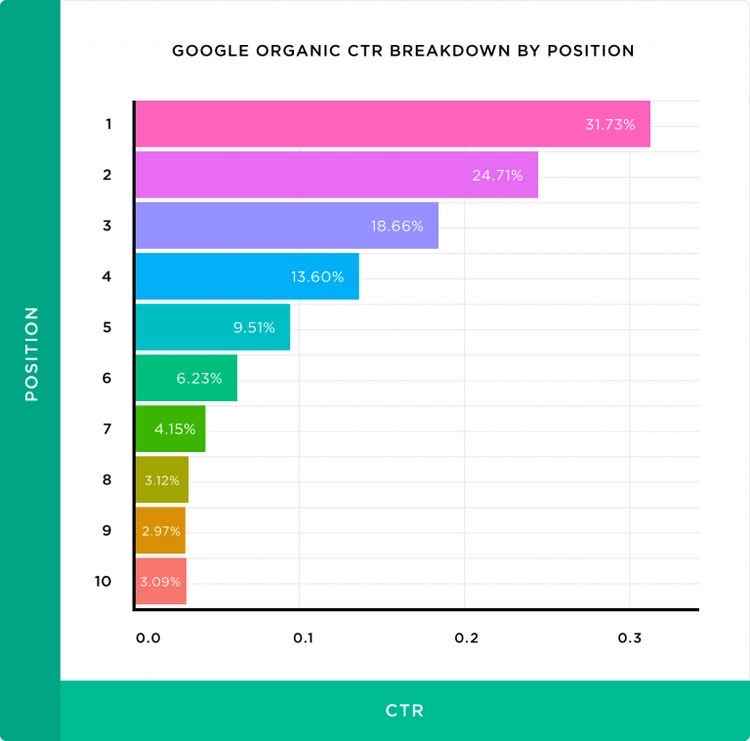

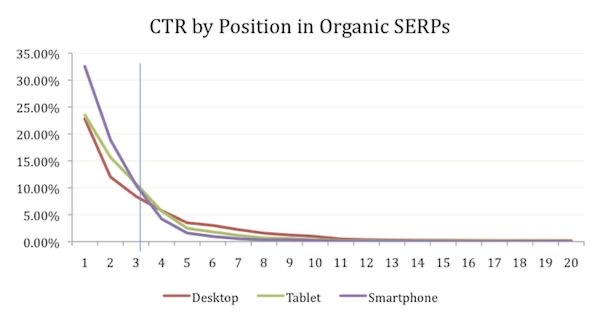

What is a good organic CTR?

The optimal organic CTR depends on your industry and business type. A general rule of thumb is that you should aim for a CTR of 1.5 times your average position, although it may fluctuate between 0.5 and 2.0. You’ll need to beat the first-page average (usually the first position) to get on the front page. An average CTR for the first page is 0.9, and for the second page, it is 0.5. To achieve the first-page position, you’ll need an average CTR of 1.5, meaning you’ll need a CTR of 2.25 for the first position. Keep in mind that the average CTR varies from one niche to another, so you’ll need to analyze your industry and competitors to find out what is a good organic CTR for your business.

The best ways to increase your organic CTR

Many factors influence the click-through rate of your organic listings, such as your content, design, and keywords, but there are five proven tips you should follow to increase your CTR.

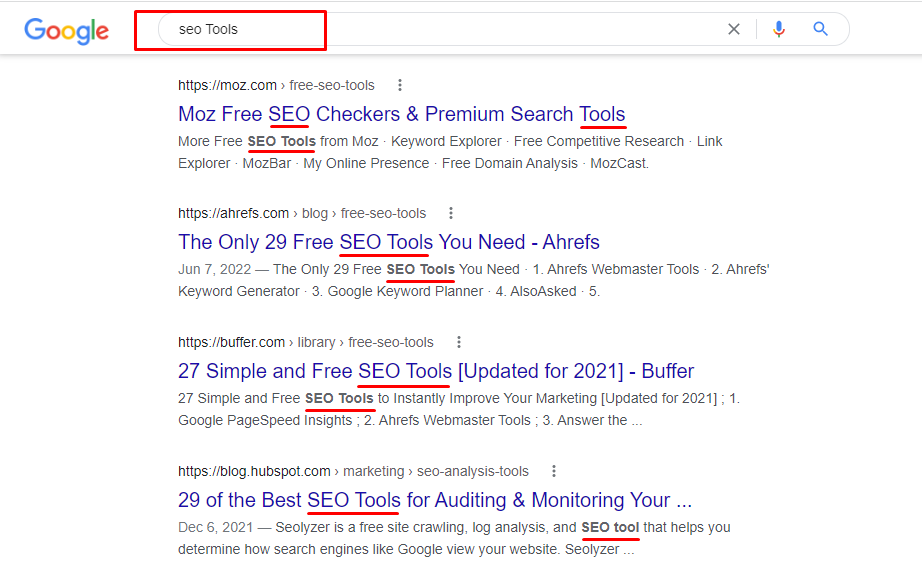

1. Keyword research

The first step towards getting a high CTR and better SERPs is research. You need to know what keywords your audience is searching for, what queries are the most popular, and which terms are most likely to convert. When you know what keywords you should be targeting, you can start optimizing your content with them in mind. Knowing your audience’s keywords will help your website rank higher in the SERPs and increase your organic CTR.

2. Make use of structured markup.

If you’re familiar with SEO, you’ve probably heard about title tags. They’re a set of HTML tags used to structure content on a page and add some context to your web pages. Title tags are an essential part of any SEO strategy, and they’re also one of the easiest things to improve your CTR. According to SEO expert Rachel Wilshaw, choosing the proper tags and including them in your content can increase your CTR by 50%. That’s a huge jump for those who have little experience with SEO, especially those who are just starting in e-commerce. Fortunately, plenty of tools can help you optimize your title and make your content SEO-friendly.

3. Optimize your on-site elements.

Optimizing your content and designing it in a way that answers users’ questions and improves your brand awareness is essential for increasing your CTR. However, not every page on a website has the same importance regarding organic search; some have a more significant impact than others. The elements you need to optimize include anchor text. Your anchor text should reflect your target keywords, but it should also be readable and branded.

4. Connect to the audience using emotions.

People buy products and services because of the benefits they’ll receive from them. However, CTR can be increased further if you appeal to your reader’s emotions. The most common emotion-based purchasing triggers are: People are more likely to click on your links if they’re excited about your product or service. You can increase your level of excitement by connecting with your reader’s emotions.

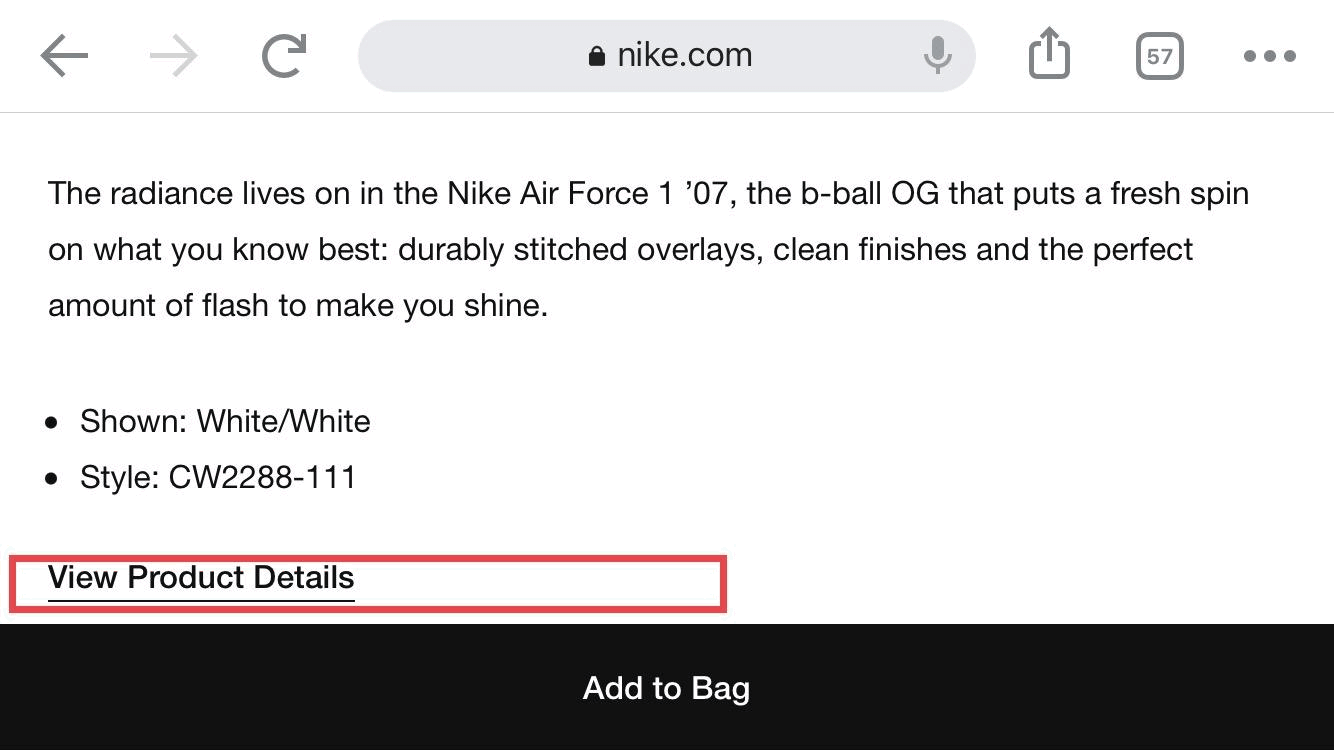

5. Optimize your description.

The first paragraph of your product description is the essential part of your product page. It’s what will persuade a visitor to click “add to cart” and make a purchase. A good description should: The main goal of your description is to answer your reader’s questions and increase your CTR. If they’re satisfied with your product, they’re more likely to click “add to cart.”

6. Use relevant and high-quality metadata.

Metadata is used to create Meta Descriptions and it is the data that describes your content. It appears in search engine results next to your brand name, product, or service. A good title: A compelling description:

7. Use brackets in your title tag.

A great way to attract attention to your product is to use brackets, numbers, and current dates in your title tag. First, type out your title tag and then add brackets, numbers, and the current date at the end of your title. For example, instead of writing “Socks for Winter,” try “Socks for Winter: February 2022” or “Socks for Winter (February 2022)”. By doing this, you are increasing your CTR because you are catching the eye of the visitor and getting them interested in your product.

8. Use descriptive keywords.

Keywords are essential, no matter what kind of website you have. They help your content rank higher in the SERPs, increase your CTR, and make it easier for customers to find you online. However, it would be best to be careful when selecting your keywords. You want to ensure they’re relevant to your content and reflect your target audience’s interests. A great way to find out what keywords you should be targeting is by using a keyword research tool. There are many tools out there, such as SEMrush and Ahrefs, and some of them offer limited free features.

9. Use a numbered list.

A numbered list is a great way to present information that’s easy to understand and helps break up the text. It also lets your reader know precisely what part of the text they’re reading and makes it easier for them to navigate through your content.

10. Use recent dates.

If you’re writing about events that happened a long time ago, you risk your content being irrelevant and out of date, which can negatively impact your organic CTR.

11. Use short and meaningful URLs.

If your post is long, you might consider breaking it up into multiple posts. However, if you cannot do that, try to use short and meaningful URLs to keep your readers on your website.

12. Optimize Your Content For Google Rich Snippets

Good rich snippets are boxes that display relevant information about your brand, products, or services. You can use rich snippets to engage your audience, increase your click-through rate, and improve your organic CTR. If you want to learn more about how to optimize your content for rich snippets, check out this article.

13. Avoid clickbait

Clickbait is a type of content designed to get you to click on a link by promising false information or something sensational. For example, a clickbait title might be “This one weird trick will get you the most likes on Instagram ever!” The word “ever” makes it sound like something impossible. Clickbait is bad practice and will only lead to bad PR and low CTR. You should avoid it at all costs if you want to boost your organic CTR and gain more brand visibility.

14. Use Heatmaps to Improve Site Clicks

It’s important to remember that a high CTR correlates to high-quality content. If you want your site to rank at the top of the SERPs and boost your CTR, you need to offer valuable and informative content. A heatmap is a graphic representation of data; in this case, click data. Using a heatmap will help you identify where on your page people are clicking. Heatmaps allow you to optimize your site’s clicks and boost your CTR with little effort.

A heatmap will show you if your readers are clicking on the proper link and the right part of the image. It allows you to see if your page is user-friendly and if it’s answering the questions your users are asking. Heatmaps are helpful because they allow you to identify potential issues on your site, adjust your design, and make it more user-friendly.

Conclusion

Organic CTR is one of the most critical metrics to track if you want to improve your brand’s online presence. Getting your content to rank higher in the SERPs and increase your CTR is not an easy task. It takes time, effort, and consistent monitoring. To boost your CTR, you need to start with keyword research, optimize your on-site elements, and connect with your readers’ emotions. Make sure you use relevant and high-quality keywords and avoid clickbait, so your content appears at the top of the SERPs and increases your CTR.

SEO Guide: What Is a Robots.txt File?

Have you ever built a website only to have it crash? It may be due to the lack of a robots.txt file to guide the activities of crawlers on your site.

A robots.txt file, when set up properly, can improve your SEO rankings and boost traffic to your platform.

That’s why I have scoured the internet to bring you this ultimate guide to robots.txt files so that you can harness their SEO-boosting powers.

Let’s dive straight in.

What Is a Robots.txt File?

A robots.txt file is a text file created by webmasters to instruct search engine robots, such as Googlebot, on how to crawl a website when they arrive.

It is simply a set of rules of conduct for search engine bots.

The robots.txt file tells internet robots, otherwise known as web crawlers, which files or pages the site owner doesn’t want them to “crawl”.

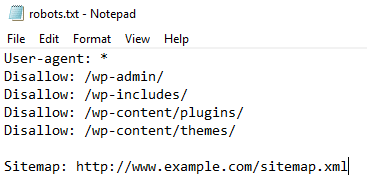

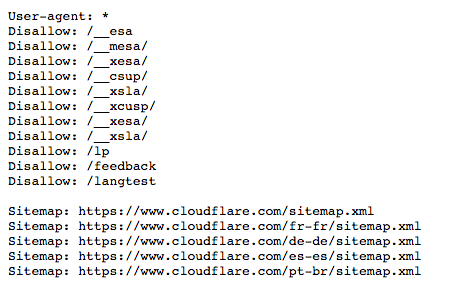

Below is what a robots.txt file looks like:

Robots.txt File – How Does It Work?

Robots.txt files are plain text files with no HTML markup, and just like every other piece of content on a website, they are housed on the server.

You can view the robots.txt file for any website by typing the full web address of the homepage and adding /robots.txt to the end.

Example:

- http://www.abcdef.com/robots.txt
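As a small illustration, the robots.txt address for any page can be worked out from its URL with Python’s standard library. The helper name `robots_url` is made up for this sketch, and the domain is the placeholder used above.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_url(page_url):
    """Return the robots.txt address for the site that serves page_url."""
    parts = urlsplit(page_url)
    # Keep only the scheme and host; robots.txt always sits at the root path.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_url("http://www.abcdef.com/blog/some-post?page=2"))
```

Whatever page you start from, the path and query string are dropped and only the host is kept.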

These files are not linked from any other content or location on the website, so your visitors are very unlikely to stumble upon them.

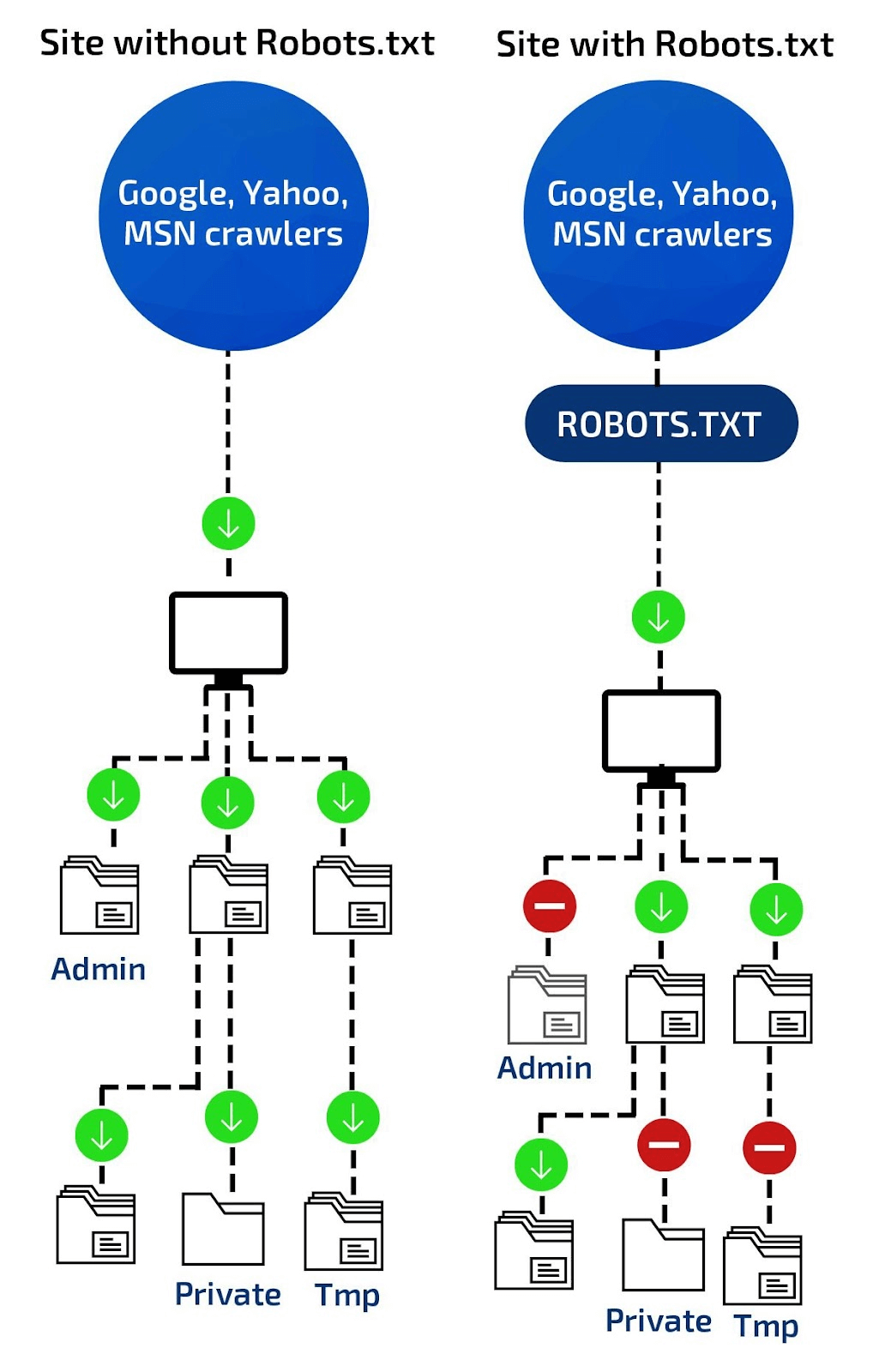

Before your web pages can feature in the search results, internet crawlers have to crawl and index your files and web pages.

That’s where robots.txt files come in. They provide the instructions for the search engine robots, giving them access to certain files and restraining or blocking them from accessing other files.

To put it another way, the robots.txt file tells search engine crawlers or “bots” which pages or files they can crawl on your website.

The file does not enforce these instructions; it only provides them. Each robot decides whether to obey them or not.

A good bot, like a news feed bot or web crawler bot, will attempt to read the robots.txt file first before attempting to access any additional web pages and will obey the robots.txt commands.

A bad bot, on the other hand, will either ignore the robots.txt file or read it to find the forbidden web pages.
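The behavior of a “good bot” can be sketched with Python’s built-in `urllib.robotparser` module. The rules, the bot name `ExampleBot`, and the URLs below are invented for illustration; a real crawler would download the file from the site instead of using an inline string.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real bot would fetch it from the site first.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""


def crawlable(urls, agent="ExampleBot"):
    """Return only the URLs that the robots.txt rules let this agent fetch."""
    parser = RobotFileParser()
    parser.parse(ROBOTS_TXT.splitlines())
    return [url for url in urls if parser.can_fetch(agent, url)]


pages = [
    "https://www.example.com/",
    "https://www.example.com/private/report.html",
]
print(crawlable(pages))
```

A well-behaved bot runs a check like this before every request. A bad bot simply skips it, which is why robots.txt cannot be treated as a security measure.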

Therefore, without this file, crawlers will assume they may visit every part of your site, including documents and sections you wouldn’t want to show up in search results.

As you can see from the above image, by using robots.txt files, you restrict web crawlers from crawling designated areas of your site.

It’s important to point out that all the subdomains on your website require robots.txt files of their own.

Therefore, to summarize, a robots.txt file helps to organize the activities of search engine bots so they don’t:

- index pages not meant for public eyes,

- overtask the server housing the website.

Importance of Robots.txt File

Here are some reasons why the robots.txt file is important and why you should use it:

The Robots.txt File Is a Guide for Web Bots

Robots.txt files function as guides for web robots, telling them what to crawl and index and what not to.

If you block the wrong section of your site in this file, the crawlers will not crawl it, and those web pages will be missing from the search results.

Using this file without sufficient and proper understanding can be problematic for the website.

That’s why it’s necessary to understand the purpose of robots.txt files and the proper way to use them.

Prevent Indexing of Resources

The robots.txt file tells search engine crawlers not to crawl designated sections.

Meta directives can work as well as robots.txt files to keep files or sections from being indexed.

Unimportant images, scripts, or style files may be helpful for your site’s configuration, but they are not “need-to-be-crawled” resources because they do not affect how your pages function.

Block Non-Public Pages

Sometimes, you may have pages that the public should not see or that robots should not index.

That could be a login page or a staging version of a page.

Such pages need to exist, but they should not be visible to the general public.

In this instance, the robots.txt file is used to keep these pages away from search engines and bots.

These web pages are usually required for distinct functions on your website.

However, they don’t need to be found by every visitor coming to your website.

Optimize Crawl Budget

A crawl budget problem occurs when search engine bots are unable to index all of your web pages because of too many pages, duplicates, and similar issues.

If search engines have a hard time indexing your web pages, an insufficient crawl budget may be the cause.

Robots.txt solves this issue by simply blocking pages that are not important. If a robots.txt file is present, then Googlebot, or whichever crawler is visiting, can spend more of your crawl budget on the pages that matter.

What Is Included Within a Robots.txt File?

The format in which robots.txt files provide commands or instructions is called a protocol, and robots.txt files use two main protocols, namely:

- Robots Exclusion Protocol

- Sitemaps Protocol

The Robots Exclusion Protocol is the set of instructions that tells robots which resources, files, and pages on your website they should not access. It’s the main protocol.

These instructions follow a set format and are part of the robots.txt file.

The Sitemaps protocol is the inclusion protocol: it tells search engine robots which resources, files, and web pages to crawl.

This protocol helps prevent robots from skipping any relevant web pages on your site.

Examples of Robots.txt Files

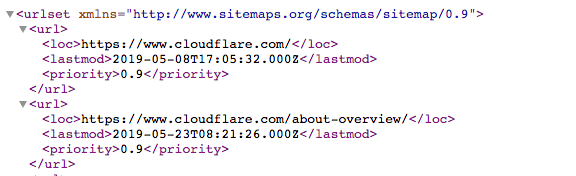

1. XML Sitemaps and Sitemaps Protocol

An XML sitemap is an XML file that lists all of the pages on your site alongside metadata.

It lets search engine robots find every web page on a site in one place.

Here is an example of what a sitemap looks like:
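For illustration, a minimal sitemap in the sitemaps.org format can be generated with Python’s standard library. The page URLs and dates are placeholders, and `build_sitemap` is a name chosen for this sketch rather than part of any tool.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build a sitemap string from (url, last_modified_date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc          # address of the page
        ET.SubElement(url, "lastmod").text = lastmod  # metadata about the page
    return ET.tostring(urlset, encoding="unicode")


# Placeholder pages for a hypothetical site.
pages = [
    ("https://www.example.com/", "2024-01-15"),
    ("https://www.example.com/blog/", "2024-02-01"),
]
sitemap_xml = build_sitemap(pages)
print(sitemap_xml)
```

Each `url` entry holds the page address in `loc`, and optional metadata such as `lastmod` sits next to it.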

The sitemaps protocol, in turn, tells search engine robots what they can include during a crawling session on your website.

The robots.txt file points to the sitemap that lists all those pages, usually with a line in the form of:

- Sitemap: then the URL of the XML file.

The sitemap protocol is necessary because it prevents web robots from omitting any resources when they crawl your website.
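Here is a rough sketch of how a crawler might pick up that Sitemap line, using Python’s `urllib.robotparser` (the `site_maps()` method needs Python 3.8 or newer). The robots.txt content and the sitemap URL are made up for the example.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that points crawlers at one XML sitemap.
ROBOTS_TXT = """\
User-agent: *
Disallow:

Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# site_maps() returns the list of Sitemap URLs, or None if none are declared.
sitemaps = parser.site_maps()
print(sitemaps)
```

The empty `Disallow:` line means nothing is blocked, so this file only tells robots where the sitemap lives.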

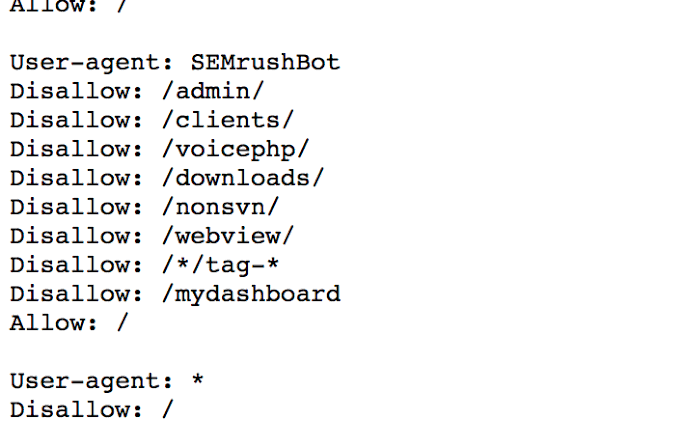

2. Robots Exclusion Protocol

The robots exclusion protocol is a set of instructions that tell search engine robots which content, resources, files, and web pages to avoid.

Below are some common directives of the robots exclusion protocol:

User-Agent Directive

The first line in each group of robots.txt directives is the “user-agent,” which identifies a particular bot.

The user agent is like an assigned name that an active program or person on the internet possesses.

For human users, it can include details such as the type of browser used and the version of the operating system.

Personal information is not part of these details. The user agent helps websites provide resources, files, and other content that is relevant to the user.

For search engine robots, user agents provide webmasters with information about the types of robots that are crawling the web pages.

Through robots.txt files, webmasters instruct specific search engine robots and tell them which pages they may crawl.

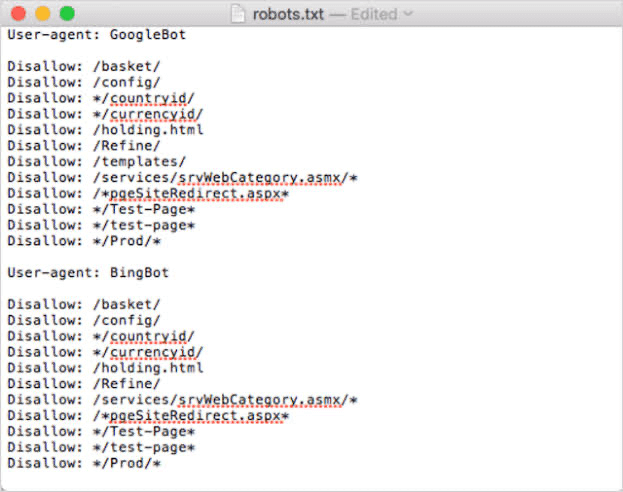

That is, they provide specific commands to robots of the different search engines by composing different guidelines for robot user agents.

For example, if a webmaster wants a particular piece of content displayed on Google and not Bing search engine results pages, that can be achieved by using two different sets of instructions in the robots.txt file.

As you can see from the above example, one set of instructions is preceded by:

- User-agent: Googlebot

The other set is preceded by:

- User-agent: Bingbot

When the robots.txt file instead reads “User-agent: *”, the asterisk stands for the “wild card” user agent, implying that the command is for all search engine robots and not restricted to a particular one.
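To make the idea of separate instruction sets concrete, here is a small sketch using Python’s `urllib.robotparser`. The paths and the name `OtherBot` are invented for illustration; only the Googlebot and Bingbot names come from the text above.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: Googlebot may crawl everything, Bingbot is kept out of
# /offers/, and every other robot falls back to the wildcard group.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow:

User-agent: Bingbot
Disallow: /offers/

User-agent: *
Disallow: /tmp/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

offer = "https://www.example.com/offers/summer.html"
google_ok = parser.can_fetch("Googlebot", offer)
bing_ok = parser.can_fetch("Bingbot", offer)
other_ok = parser.can_fetch("OtherBot", "https://www.example.com/tmp/cache.html")
print(google_ok, bing_ok, other_ok)
```

A robot uses the group that names it and only falls back to the `*` group when no group matches its user agent.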

The user agent names for the common search engine robots include:

Google:

- Googlebot,

- Googlebot-News (for news),

- Googlebot-Image (for images),

- Googlebot-Video (for video).

Bing:

- Bingbot,

- MSNBot-Media (for images and video).

Disallow Directive

This command is the most commonly used in the robot exclusion protocol.

Disallow directives tell search engine robots not to crawl the web pages whose paths match the directive.

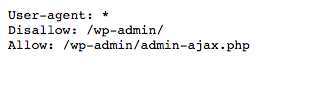

In the example above, the robots.txt file prevents all search engine robots from accessing and crawling the wp-admin page.

In most scenarios, the disallowed pages are not necessary for Bing and Google users, and it’d be time-wasting to crawl them.
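A rule like that can be checked with Python’s `urllib.robotparser`. This is only a sketch: the domain and page paths are placeholders, and the rule assumes the WordPress admin area lives under `/wp-admin/`.

```python
from urllib.robotparser import RobotFileParser

# Block every robot from the admin area; everything else stays crawlable.
ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

admin_ok = parser.can_fetch("Googlebot", "https://www.example.com/wp-admin/options.php")
blog_ok = parser.can_fetch("Googlebot", "https://www.example.com/blog/hello-world/")
print(admin_ok, blog_ok)
```

The rule works as a path prefix, so every URL that starts with `/wp-admin/` is covered by the single Disallow line.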

Allow Directive

Allow Directives are instructions that tell search engine robots that they are permitted to access and crawl a particular directory, web page, resource, or file.

Below is an example of an Allow instruction.

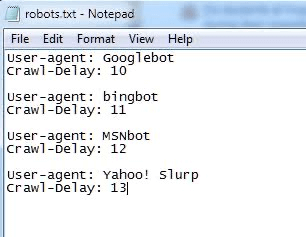
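An Allow rule is often used to carve an exception out of a blocked directory. The sketch below assumes a WordPress-style layout with an `admin-ajax.php` file that should stay reachable; the domain is a placeholder. Python’s parser applies the first rule that matches, so the Allow line is listed first here, whereas Google picks the most specific matching rule regardless of order.

```python
from urllib.robotparser import RobotFileParser

# Allow one file inside an otherwise blocked directory.
ROBOTS_TXT = """\
User-agent: *
Allow: /wp-admin/admin-ajax.php
Disallow: /wp-admin/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

ajax_ok = parser.can_fetch("Googlebot", "https://www.example.com/wp-admin/admin-ajax.php")
settings_ok = parser.can_fetch("Googlebot", "https://www.example.com/wp-admin/options.php")
print(ajax_ok, settings_ok)
```

Listing the Allow line before the Disallow line keeps the result the same for parsers that read rules in order and for those that prefer the most specific rule.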

Crawl-Delay Directive

The Crawl-delay directive instructs search engine robots to alter the frequency at which they visit and crawl a site so that they don’t overburden a website server.

With crawl-delay directives, a webmaster can tell the robots how long they should wait between requests, and the value is usually given in seconds. Support varies between crawlers: Bingbot honors the directive, while Googlebot ignores it.

The above image shows a crawl-delay directive telling Googlebot, Bingbot, MSNbot, and Yahoo! Slurp to wait for 10, 11, 12, and 13 seconds before requesting crawling and indexing.
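As a rough sketch, a polite crawler could read the delay with Python’s `urllib.robotparser` and pause between requests. The values and the `OtherBot` name are examples only, and crawlers that honor the directive generally treat the number as seconds.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical delays: Bingbot gets its own value, other robots use the wildcard.
ROBOTS_TXT = """\
User-agent: Bingbot
Crawl-delay: 10

User-agent: *
Crawl-delay: 5
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

bing_delay = parser.crawl_delay("Bingbot")    # value from the Bingbot group
other_delay = parser.crawl_delay("OtherBot")  # falls back to the wildcard group
print(bing_delay, other_delay)
```

A crawler would then wait that long, for instance with `time.sleep(bing_delay)`, before requesting the next page.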

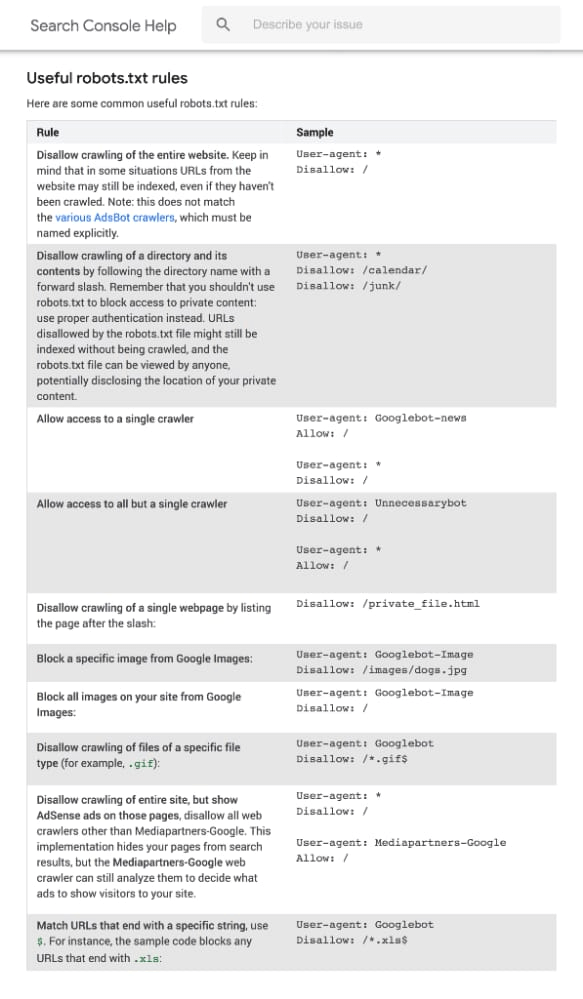

Below are some robots.txt rules and their meanings:

Understanding The Constraints of a Robots.txt File

There are constraints to this blocking method that you should understand before building a Robots.txt file.

Some limitations are as follows:

Crawlers Decipher Syntax Differently

Different crawlers may interpret the directives in the robots.txt file differently.

However, most credible crawlers will follow the commands in a robots.txt file.

It is, therefore, important to know the correct syntax for addressing different web crawlers, as some may not understand certain instructions in robots.txt.

Some Search Engines May Not Support Robots.txt Instructions

Since the commands in robots.txt files cannot be enforced on a crawler, the web crawler has to obey them willingly.

Googlebot and other reputable internet crawlers will usually obey the directives in a robots.txt file.

However, that cannot be said of other crawlers.

Therefore, if you would like to keep information secure, it is advisable to use other blocking methods, such as protecting private files on your server with a password.

Pages that Are Forbidden in Robots.txt Can Still Be Indexed if They Are Linked to by Other Sites

Web pages that are blocked using robots.txt will not be crawled by Google.

However, if the blocked URL is linked from other places on the internet, then it is a different thing entirely.

Search engines can locate and index it nonetheless.

Therefore, the site address and, possibly, other available data can still appear in search engine results.

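To properly keep a page out of the search engine results pages, you should password-protect such files on your site.

You can also use the noindex response header or meta tag, or remove the page entirely. Keep in mind that crawlers can only see a noindex rule on pages they are allowed to fetch, so such a page must not also be blocked in robots.txt.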
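Here is a minimal sketch of the response header and meta tag approach, written as a tiny Python WSGI app. The `/staging/` path, the page content, and the helper `response_headers` are assumptions made for this example; `X-Robots-Tag` and the robots meta tag are the standard ways to send a noindex rule.

```python
NOINDEX_META = '<meta name="robots" content="noindex">'


def app(environ, start_response):
    """Tiny WSGI app that marks a hypothetical /staging/ area as noindex."""
    path = environ.get("PATH_INFO", "/")
    private = path.startswith("/staging/")
    headers = [("Content-Type", "text/html; charset=utf-8")]
    if private:
        # Response header version of the noindex rule.
        headers.append(("X-Robots-Tag", "noindex"))
    start_response("200 OK", headers)
    # Meta tag version of the same rule, placed in the page head.
    head = NOINDEX_META if private else ""
    body = f"<html><head>{head}</head><body>Hello</body></html>"
    return [body.encode("utf-8")]


def response_headers(path):
    """Call the app directly (no network) and return its headers as a dict."""
    captured = {}

    def start_response(status, headers):
        captured.update(headers)

    app({"PATH_INFO": path}, start_response)
    return captured


print(response_headers("/staging/new-home.html").get("X-Robots-Tag"))
print(response_headers("/blog/").get("X-Robots-Tag"))
```

Either signal is enough on its own; the header version is handy for files such as PDFs and images that have no HTML head to hold a meta tag.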

Best Practices for Robots.txt

Here are some of the best practices for robots.txt:

1. Use Disallow to Curb Duplicate Content

Duplicate content often arises when the same page is available at more than one URL on your site.

You can curb duplicate content by using the “Disallow” directive to tell search engines that those copied editions of your web pages are not to be crawled.

That way, only the original content is crawled and indexed by the robots.

The duplicate content is blocked.

Keep in mind, however, that robots.txt only affects crawlers; visitors who follow a direct link can still open the blocked pages.